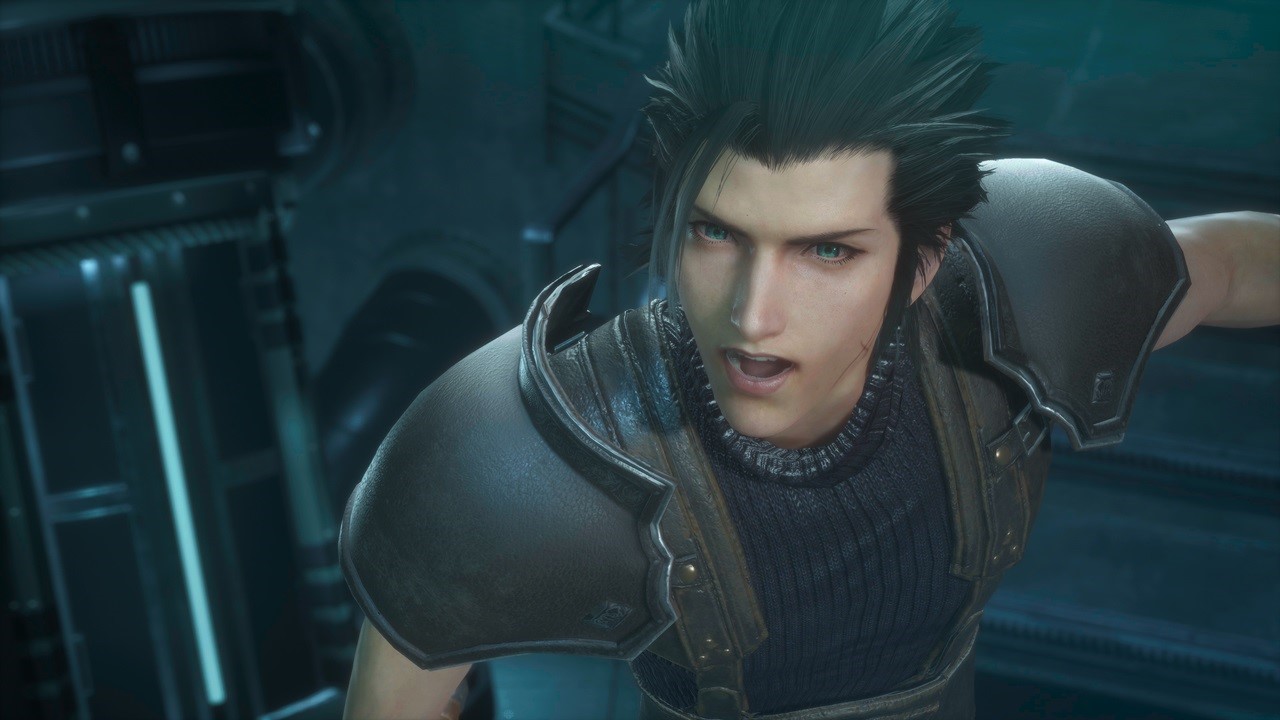

How’d they do it? Crisis Core -Final Fantasy VII- Reunion’s small video sizes and fantastic lip syncing [PR interview]

Square Enix released Crisis Core -Final Fantasy VII- Reunion in December 2022. The game is a remastered version of Crisis Core -Final Fantasy VII-, originally released for PSP, and has earned a “Very Positive” status from user reviews on Steam.

The game’s development team managed to successfully remaster this 15-year-old title for a wide range of platforms: PS4, PS5, Xbox One, Xbox Series X|S, Nintendo Switch, and PC (Steam). That is no simple accomplishment. For this interview, AUTOMATON was joined by members from CRI Middleware—who contributed to the game via their audio and visual technology—to ask staff from Square Enix and Tose about the challenges and innovative approaches taken during the development process.

The following members took part in the interview. We have listed both their official position and the role they assumed for this particular game.

Square Enix

Project manager (producer): Mariko Sato

Game designer (event director): Masaru Oka

Tose

Manager (project manager): Kenjiro Kato

Manager (program director): Haruyuki Yamaguchi

Manager (sound manager): Masato Nakamura

Please note that Crisis Core -Final Fantasy VII- Reunion utilizes some of CRI Middleware’s technologies, including the sound middleware CRI ADX; the high-compression, high-quality video middleware CRI Sofdec; and the voice analysis lip sync middleware CRI LipSync.

*This article is based on a sponsored interview posted to the Japanese edition of AUTOMATON on April 8, 2023 (JST).

Unreal Engine 4 chosen for its multi-platform compatibility

――Please introduce yourself and tell us about your role for this game.

Sato (Square Enix):

I’m Sato, the producer for Crisis Core -Final Fantasy VII- Reunion (hereafter referred to as CCFF7R). I have worked as a project manager on recent titles, but for this game, I served as producer.

Oka (Square Enix):

I’m Oka, the event director for CCFF7R. I was also involved in the development of the original version of this game. My background is mainly as a game designer, but I also worked on scenarios and cutscenes for the Kingdom Hearts series. When I first entered the industry, I was involved in a game called Treasure of the Rudras.

Kato (Tose):

I’m Kato, and I worked as the project manager for CCFF7R. My career started with QA management, but gradually shifted to planner* and management roles. I have a long-standing relationship with Square Enix, and worked as both project manager and sound director on World of Final Fantasy.

*Planner: A job title akin to a game designer in the west.

Yamaguchi (Tose):

I’m Yamaguchi, program director for CCFF7R. I joined Tose in 2004, and have mainly been involved in developing console games, but I have also worked on a wide range of projects such as mobile games and cabinet-style game machines.

Nakamura (Tose):

I’m Nakamura, sound manager for CCFF7R. I joined Tose in 2001 and have been involved in mobile, cabinet-style game machine, and console titles. The sound team currently consists of 9 members, with me as the manager. For this game, I was in charge of sound programming and also worked as an advisor on other technical aspects.

——Please tell us about Crisis Core -Final Fantasy VII- Reunion.

Sato (Square Enix):

Crisis Core -Final Fantasy VII- was originally released in 2007, but I believe this new version goes beyond a simple remaster. In addition to visual upgrades, such as the complete overhaul of 3D models, the game has been fully transformed with changes made to the battle and other key systems, as well as the addition of full voice support and newly arranged music.

——How did the collaborative relationship between Square Enix and Tose begin?

Kato (Tose):

We have been collaborating on this project from the very beginning. Even in the pre-production phase, we were working together to run tests and inspect as many aspects of the project as we could, all with the goal of doing everything in our power to make this remaster as great as it could possibly be. At the end of the pre-production stage, it was decided that we would proceed with development using Unreal Engine. The reason was simple: this game had to be compatible with multiple platforms.

——As this is a remaster, I take it that the inspections you mentioned were done on the original version of the game. How did you proceed with this process?

Kato (Tose):

Since the original version was made for the PSP, it was developed with special consideration for that hardware’s characteristics. For example, one thing we had to do at the time was make sure the data on the UMD (Universal Media Disc) was organized in a certain way so as to minimize the movement of the lens and ensure smooth loading during gameplay. Analyzing this data structure was one of the areas we started with.

Yamaguchi (Tose):

That said, the original version was created using C++, and because UE is also mainly based on the same language, we were able to modify the original for use with UE by adding support for 64-bit addresses and cleaning up the reading processes. Our company has been involved in game development and porting for a long time, so our knowledge in this area helped us progress through this verification process smoothly.
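The interview does not say which parts of the code needed 64-bit work. One common issue when moving 32-bit-era code to 64-bit targets is binary data that stores addresses in 32-bit fields. The Python sketch below illustrates the usual remedy: store offsets relative to the start of the buffer and resolve them at read time. The table layout and the function names are hypothetical and are not taken from the game's code.

```python
import struct

def build_name_table(names):
    """Pack names as: u32 count, count u32 offsets, then NUL-terminated strings.

    Offsets are relative to the start of the blob, so the data stays valid
    wherever it is loaded and whatever the pointer width is (hypothetical layout).
    """
    header_size = 4 + 4 * len(names)
    body = b""
    offsets = []
    for name in names:
        offsets.append(header_size + len(body))
        body += name.encode("ascii") + b"\x00"
    return struct.pack(f"<I{len(names)}I", len(names), *offsets) + body

def read_name_table(blob):
    """Resolve each 32-bit offset against the blob itself, not an absolute address."""
    (count,) = struct.unpack_from("<I", blob, 0)
    offsets = struct.unpack_from(f"<{count}I", blob, 4)
    names = []
    for off in offsets:
        end = blob.index(b"\x00", off)
        names.append(blob[off:end].decode("ascii"))
    return names

table = build_name_table(["zack", "aerith"])
restored = read_name_table(table)
```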

The implementation of full voice support re-accentuates the charm of the characters

——You mentioned your desire to make this remaster as great as it could be. Could you tell us which part of the sound production you considered to be the most important?

Kato (Tose):

The thing we wanted to do most was add full voice support.

Sato (Square Enix):

Yes, I think the implementation of full voice support is a truly distinctive evolution. Scenes that only included text in the original version are now packed full of emotion. I believe it allows the players to experience those parts of the story in a new light, hopefully evoking reactions such as, “I didn’t realize Zack spoke with such emotion,” or, “The interactions with Aerith are so much more divine.”

Nakamura (Tose):

Implementing full voice support was certainly important, but I think remaking all the background music was a big addition to the remaster as well. Recording new arrangements of popular songs from the original game was huge, and definitely something I hope everyone will enjoy.

——Are there any specific scenes you personally love?

Kato (Tose):

The opening scene. As I watched the battle scene play out in high definition, it suddenly occurred to me that, “Wow, I’m actually involved in an FF7 derivative title.” It’s a scene I remember well from the PSP version, so I felt a sudden wave of joy watching it. Another memorable scene for me was the first meeting with Aerith. The original version included voice acting as well, but seeing the remastered version was a particularly powerful moment.

It’s not just us creators who have fond memories of the original, though. The game holds a special place in a lot of players’ hearts, and we were keenly aware of our responsibility to those memories. At the same time, however, we also wanted to do our best to provide an enjoyable experience to those who were completely new to the series.

Yamaguchi (Tose):

I personally found the scene right after the opening sequence, when the camera pans down and the player is first able to take control, to be particularly impactful. It took quite a bit of time to modify the PSP source code to run properly on UE, so I was absolutely thrilled when this scene worked for the first time. I also used this scene for adjusting and setting the overall visual presentation of the game, so I ended up watching it many times. As a result, I developed a special attachment.

Sato (Square Enix):

I found the interactions between Zack and young Yuffie quite impactful. In the original version, these scenes were not voiced, so hearing them with full voice acting made me realize, “Wow, their exchanges are so comical.” The performance of the voice actors really managed to capture the stiffness of Zack’s dialogue against Yuffie’s seriousness. I really enjoyed watching these scenes as I did the quality checking.

Oka (Square Enix):

My personal favorite is the scene where Zack buys a ribbon for Aerith. The original version wasn’t voiced, so getting to hear it in this version made it so much more emotional.

——As a player, I was certainly happy to see how much the event scenes and battles improved thanks to the addition of voices. Were there any other changes you had in mind for the game that you eventually decided not to implement?

Nakamura (Tose):

We had originally considered making it possible for the player to switch between the newly arranged background music and the original soundtrack. In the end, however, we decided not to implement that feature.

Sato (Square Enix):

We made sure to maintain the atmosphere of the original music when re-arranging the BGM for this version. As a result, we decided to prioritize the enjoyment of the game as a single, completed work rather than offering choices that could potentially take away from the player experience.

Kato (Tose):

When it comes to game development, the end result improves the more you work on a title. There were many things we wanted to do, but we also decided to focus on the fact that we were creating a remastered version, not a remake.

One advantage of developing with CRI ADX is that even though the environment has changed, you can still create sound the same way

——How many people were part of the sound team for this game?

Nakamura (Tose):

There were six people in total. I handled management and led implementation, one person led direction and production, and four other members worked on SE production. While some of the members changed along the way, there were never more than four working simultaneously. Since the BGM was arranged by the original composer, Takeharu Ishimoto, our team was able to focus all our efforts on sound effects production.

——This game utilizes UE4 and ADX. Are there many other projects within the company that do the same thing?

Yamaguchi (Tose):

Yes, there are many projects developed with UE that also utilize ADX* for the sound system. We have been using ADX and Sofdec** since long before we started developing with UE. The implementation methods have been the same for a long time as well, which made the transition to UE quite smooth. Normally, implementation methods change significantly after switching to a new development environment, so it’s extremely fortunate that we could make use of the same workflow as before when creating sound and video.

*ADX: Sound middleware provided by CRI Middleware. It enables simple implementation of diverse sound production required in game development.

**Sofdec: CRI Middleware’s movie middleware. It is capable of playing highly compressed, high-quality video.

——Development for this game made use of the original version as its foundation. Did the sound development make use of the original sound system and its assets?

Nakamura (Tose):

Since the sound team was involved in the project from the very beginning, we started by analyzing the PSP version. We added debugging functions to the original version and proceeded with our checks. After that, the programmers led the work on porting everything to the current system. Once the sounds from the original version could be played without problems, we started work on re-creating those sounds so as to match the game’s updated visuals.

The magical effects and some other assets are based on the original version, but many of the sound effects, such as those relating to character movement, have been completely reworked. For sounds related to animation, we used the playback timing defined in the animation data of the original version. We used UE’s Timeline to create the triggers which tell the game when to play each sound effect, while everything else utilizes the specifications from the original version.
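The idea of firing sound effects from timing values stored alongside an animation can be sketched in a few lines of Python. This is only an illustration of the general technique, not the team's implementation or UE's Timeline API. The `SoundTrigger` type, the cue names, and the times are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SoundTrigger:
    time_sec: float  # playback timing taken from the animation data
    cue_name: str    # hypothetical sound cue identifier

def fired_between(triggers, prev_time, curr_time):
    """Return cue names whose time lies in (prev_time, curr_time].

    The half-open interval means each trigger fires exactly once,
    even when frame times do not line up with trigger times.
    """
    return [t.cue_name for t in triggers if prev_time < t.time_sec <= curr_time]

triggers = [
    SoundTrigger(0.10, "footstep_left"),
    SoundTrigger(0.45, "footstep_right"),
    SoundTrigger(0.80, "sword_swing"),
]

# Simulate three frame updates of the animation clock.
prev = float("-inf")
played = []
for now in (0.25, 0.50, 1.00):
    played.extend(fired_between(triggers, prev, now))
    prev = now
```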

As for environmental sounds, they were not present in the original version of the game. We used the features within UE to set these up. Figuring out how to fit pieces of the original code together with elements developed within UE was like putting together a puzzle. This made it rather enjoyable.

——The 3D models for this version have been completely redone. I’m assuming this, along with the implementation of full voice support, made accurate lip syncing a necessity. Could you explain your reasons for using CRI LipSync*?

*CRI LipSync: CRI Middleware’s lip sync middleware developed for analyzing speech. It is used to automatically generate natural mouth movement from speech data.

Yamaguchi (Tose):

Since we largely used ADX for everything relating to sound, we looked into using CRI LipSync because it could be used in conjunction with ADX. We considered other lip sync systems as well, but the results we got from CRI LipSync were so good that we decided to move forward with it. Both its tools for generating lip sync data and its libraries work seamlessly with the UE and ADX workflows, which made it incredibly easy to implement.

Its accuracy was also impeccable. CRI LipSync offers two types of analysis: pre-analysis* and real-time analysis**. During our tests, most scenes had no problems when using the latter. However, motion interpolation sometimes caused timing discrepancies to arise in certain scenes with fast-paced dialogue. This also led to unintended mouth movements showing up in dialogue with sighs, for example. In the end, we decided to use pre-analysis.

*Pre-analysis: A method of generating lip sync data by analyzing audio files and outputting the resulting mouth movements as a file. It’s suitable for creating lip syncs with attention to detail, as adjustments can be made manually.

**Real-time analysis: A method of generating mouth movements for characters in real-time while playing back audio. This method enables highly accurate lip syncing without the need for manual adjustment.

——Did you perform post-process edits on the data analyzed in this way?

Yamaguchi (Tose):

To counteract the timing discrepancies caused by motion interpolation, we removed frames from the beginning of animations so that mouth movements would start sooner. I think the ability to manually manipulate the data from the pre-analysis in this way is a great feature.
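A minimal sketch of this kind of adjustment, assuming the pre-analyzed result can be treated as a list of per-frame mouth-open values. The real CRI LipSync data format is not described in the interview, so the list and the function name here are hypothetical.

```python
def trim_leading_frames(mouth_frames, frames_to_drop):
    """Drop frames from the start so that mouth movement begins earlier."""
    if frames_to_drop < 0:
        raise ValueError("frames_to_drop must be non-negative")
    return mouth_frames[frames_to_drop:]

# Hypothetical per-frame mouth-open values (0.0 = closed, 1.0 = fully open).
analyzed = [0.0, 0.0, 0.0, 0.2, 0.7, 1.0, 0.6, 0.1]
adjusted = trim_leading_frames(analyzed, 2)

def first_open(frames):
    """Index of the first frame in which the mouth is open."""
    return next(i for i, v in enumerate(frames) if v > 0.0)
```

In this example the first open-mouth frame moves from index 3 to index 1, which is the kind of head start that can offset a delay introduced by interpolation.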

Nakamura (Tose):

We sometimes prepared separate waveforms for analysis, rather than using the ones from the tool. This was particularly useful for noises such as breathing and sighing, because the lip sync generator would react to them as if they were actual conversation. To get around this, we would cut out the breathing sections of the waveforms before running them through analysis, then insert the regular versions of the data that included breathing later during voice encoding.
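The analysis-only waveform described above could be prepared along the following lines. This sketch silences the breathing regions instead of deleting them, an assumption made here so that the timing of the remaining speech is unchanged. The sample values, sample rate, and region list are hypothetical.

```python
def mute_regions(samples, sample_rate, regions_sec):
    """Return a copy of samples with each (start, end) region in seconds zeroed."""
    out = list(samples)
    for start, end in regions_sec:
        lo = max(0, round(start * sample_rate))
        hi = min(len(out), round(end * sample_rate))
        for i in range(lo, hi):
            out[i] = 0
    return out

# Toy waveform: 1 second at 10 samples per second, with a breath from 0.2 s to 0.5 s.
voice = [5] * 10
analysis_wave = mute_regions(voice, sample_rate=10, regions_sec=[(0.2, 0.5)])
# `voice` itself is untouched, so the version with breathing is still
# available for the audio that players actually hear.
```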

——This game is available in multiple languages. Does the lip sync data differ depending on the language being spoken?

Sato (Square Enix):

Yes, the lip sync data is tailored to each language. This game is fully voiced in Japanese and English, and the generated movements are based on the data analyzed from the voices being used.

As for the text, in addition to the EFIGS (English, French, Italian, German, Spanish) supported in the original version, the game can now also be enjoyed in Simplified Chinese, Traditional Chinese, and Korean.

Oka (Square Enix):

Speaking of localization, the original version also had English audio. This meant we had to manually adjust the timing and subtitles for each event. For example, there were some scenes in which the English audio ended up being longer than the Japanese. As a result, it wouldn’t fit the length of the scene. In these instances, we would have to individually edit each scene, often forcefully extending the timing of the event scene to accommodate an extra 100 frames or so. It was quite the undertaking.

Nakamura (Tose):

For this game, we made sure the translations were done in a way that the number of pages (the number of times the subtitle window appears) would be the same for each language. This made adjusting the timing much easier. In game development, it’s common to have situations where the Japanese dialogue fits into three text boxes (meaning it takes three button presses before the scene ends), whereas in another language, it takes five text boxes to display the same conversation (and therefore five button presses). For this game, however, we made sure the translations were done so that the text windows would correspond in a 1-to-1 ratio, and were therefore able to reuse the scripts and motions for every scene.
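A rule like this is easy to check automatically. The following Python sketch flags scenes whose page counts differ between languages. The data layout, scene IDs, and sample text are hypothetical and only illustrate the check. The interview does not describe the tooling that was actually used.

```python
def mismatched_scenes(scripts):
    """Return {scene_id: {language: page_count}} for scenes whose counts differ.

    scripts maps scene_id -> {language: [page_text, ...]}.
    """
    bad = {}
    for scene_id, by_lang in scripts.items():
        counts = {lang: len(pages) for lang, pages in by_lang.items()}
        if len(set(counts.values())) > 1:
            bad[scene_id] = counts
    return bad

scripts = {
    "ev_0010": {"ja": ["p1", "p2", "p3"], "en": ["p1", "p2", "p3"]},
    "ev_0020": {"ja": ["p1", "p2", "p3"], "en": ["p1", "p2", "p3", "p4", "p5"]},
}
report = mismatched_scenes(scripts)
```

Here only `ev_0020` is reported, because its English script needs five pages where the Japanese one needs three.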

Sofdec was essential for reducing video file size

——I’d like to ask about multi-platform support. I was surprised to learn that this game not only supported PS5 and Xbox Series X|S, but also Nintendo Switch. Was it necessary to make any special changes in terms of specifications? How was the sound support?

Nakamura (Tose):

We had to limit the number of sounds being played simultaneously to a maximum of 64, and so had to put a lot of thought into which sounds we could remove from each scene. We actively used ADX’s REACT* to implement features such as category queue limits and ducking functions to help remove as many unnecessary sounds as possible.

*REACT: a mechanism for designing changes in volume and other sound elements. It allows for control such as lowering the volume of BGM during dialogue playback.
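To make the ideas of a voice cap, per-category limits, and ducking concrete, here is a small Python sketch. It does not use or reproduce the ADX API. The class, the priority scheme, and the 0.4 duck gain are assumptions chosen for illustration.

```python
class VoicePool:
    """Toy voice manager with a global cap and optional per-category caps."""

    def __init__(self, max_voices=64, category_limits=None):
        self.max_voices = max_voices
        self.category_limits = category_limits or {}
        self.active = []  # (priority, category, name) in start order

    def _count(self, category):
        return sum(1 for _, c, _ in self.active if c == category)

    def play(self, name, category, priority):
        """Try to start a sound; return True if it was accepted."""
        limit = self.category_limits.get(category)
        if limit is not None and self._count(category) >= limit:
            return False  # this category is already full
        if len(self.active) >= self.max_voices:
            lowest = min(self.active, key=lambda v: v[0])
            if lowest[0] >= priority:
                return False  # nothing less important to drop
            self.active.remove(lowest)  # steal the least important voice
        self.active.append((priority, category, name))
        return True

def ducked_bgm_volume(base_volume, dialogue_playing, duck_gain=0.4):
    """Lower BGM volume while dialogue is playing (simple ducking)."""
    return base_volume * duck_gain if dialogue_playing else base_volume

pool = VoicePool(max_voices=3, category_limits={"footstep": 2})
results = [
    pool.play("step_a", "footstep", priority=1),
    pool.play("step_b", "footstep", priority=1),
    pool.play("step_c", "footstep", priority=1),  # rejected: category limit
    pool.play("zack_line", "voice", priority=5),
    pool.play("explosion", "se", priority=3),     # pool full: replaces step_a
    pool.play("wind", "ambient", priority=0),     # rejected: lowest priority
]
```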

This game is action-packed, so it’s necessary to play many sounds in a very short period. This became a problem in terms of processor load as well, with disk access and CPU speeds being the main bottlenecks. To avoid the need to read data every single time a sound needed to be played, we developed a method of pre-loading and storing sound data for later use, therefore reducing the load on the processor.
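The pre-loading approach can be illustrated with a small cache that reads each sound once and serves later requests from memory. This is a generic sketch, not the team's code. The `loader` callback stands in for whatever disk read the real system performs, and all names are hypothetical.

```python
class SoundCache:
    """Keep decoded or raw sound data in memory so playback avoids disk reads."""

    def __init__(self, loader):
        self._loader = loader  # function: name -> bytes (e.g. a disk read)
        self._data = {}
        self.load_count = 0    # number of times the loader was actually called

    def preload(self, names):
        """Load any sounds that are not cached yet, e.g. at scene start."""
        for name in names:
            if name not in self._data:
                self._data[name] = self._loader(name)
                self.load_count += 1

    def get(self, name):
        """Return sound data, loading on demand only if it was never preloaded."""
        if name not in self._data:
            self.preload([name])
        return self._data[name]

def fake_loader(name):
    # Stand-in for a slow disk read.
    return f"data:{name}".encode("ascii")

cache = SoundCache(fake_loader)
cache.preload(["slash", "hit", "slash"])
for _ in range(100):
    cache.get("slash")  # served from memory every time
```

After the loop the loader has still been called only twice, once per distinct sound, no matter how often `slash` is played.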

Yamaguchi (Tose):

We were also careful about compressing video files, as the ROM capacity differs by platform. As a side note, we used Sofdec to compress the game from 50GB to 20GB even for platforms with no capacity issues in order to reduce download times.

Nakamura (Tose):

We were able to keep the total size of the video files down to around 2 or 3GB. As for the codecs, H.264 was used for PS4/PS5 and Xbox One/Xbox Series X|S, while VP9 was used for Steam and Nintendo Switch. We calculated sizes repeatedly during development and were worried that the total might end up too big for the ROM, but everything worked out in the end.
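The figures quoted in these answers can be written down as a small lookup table and a size calculation. The codec mapping and the 50GB and 20GB values come from the interview. The platform keys and function names are hypothetical, and the code is only a sketch of how such bookkeeping might look.

```python
# Codec per platform, as stated in the interview.
VIDEO_CODEC_BY_PLATFORM = {
    "PS4": "H.264",
    "PS5": "H.264",
    "Xbox One": "H.264",
    "Xbox Series X|S": "H.264",
    "Steam": "VP9",
    "Nintendo Switch": "VP9",
}

def codec_for(platform):
    """Look up the video codec used for a platform."""
    return VIDEO_CODEC_BY_PLATFORM[platform]

def reduction_percent(before_gb, after_gb):
    """Percentage by which the size shrank."""
    return 100 * (before_gb - after_gb) / before_gb

saved = reduction_percent(50, 20)  # the 50GB -> 20GB figure from the interview
```

Going from 50GB to 20GB is a 60 percent reduction in size.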

The graphics aren’t particularly GPU-intensive, and there is no difference in processing functions between any of the devices. Knowing how compact to keep the core of the game was important, and the Sofdec library is written with that in mind. I think this makes it an easy tool for creators to work with.

——Thank you very much. Lastly, could you please share your overall impressions of CRI Middleware’s toolset and support system?

Nakamura (Tose):

This was actually a sort of test of the technical capabilities of the lip sync tool. As I mentioned earlier, CRI LipSync uses pre-analysis, and we would embed the analysis results into the sound files. We would then read both the sound data and lip-sync data at the same time, and pass those results to the game program.
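The general idea of carrying lip sync data inside the same file as the audio, and reading both in one pass, can be sketched with a simple chunked container. This is not the CRI file format, which the interview does not describe. The `AUDI` and `LIPS` tags and the layout are invented purely for illustration.

```python
import struct

def pack_chunks(audio: bytes, lipsync: bytes) -> bytes:
    """Write two chunks, each as: 4-byte tag, u32 little-endian size, payload."""
    out = b""
    for tag, payload in ((b"AUDI", audio), (b"LIPS", lipsync)):
        out += tag + struct.pack("<I", len(payload)) + payload
    return out

def unpack_chunks(blob: bytes) -> dict:
    """Read every chunk in a single pass and return {tag: payload}."""
    chunks = {}
    pos = 0
    while pos < len(blob):
        tag = blob[pos:pos + 4]
        (size,) = struct.unpack_from("<I", blob, pos + 4)
        chunks[tag] = blob[pos + 8:pos + 8 + size]
        pos += 8 + size
    return chunks

blob = pack_chunks(audio=b"\x01\x02\x03\x04", lipsync=b"\x00\x7f\xff")
chunks = unpack_chunks(blob)
# The game side would hand chunks[b"AUDI"] to the sound player and
# chunks[b"LIPS"] to the facial animation at the same time.
```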

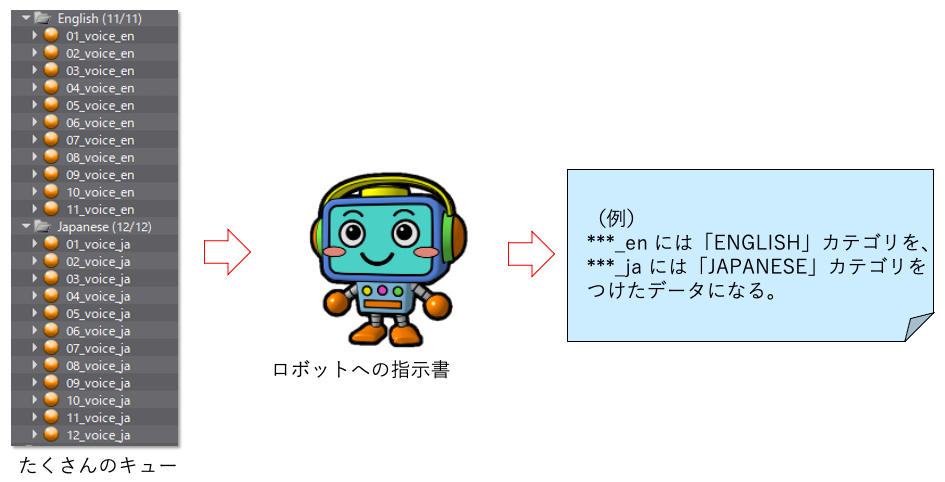

This CRI LipSync feature was first introduced as a test feature at CEDEC 2019. Despite it not yet being an official version, we spoke with CRI Middleware about it and they provided us with a patch. As a result, we had to sort of hack the software, observing the data closely and adjusting parameters as needed. We were also able to incorporate automation as much as possible, so we have high expectations for the CRI Atom Craft Robot* function in the future.

*CRI Atom Craft Robot: A function that allows you to automate CRI ADX’s authoring tool “CRI Atom Craft” according to whatever rules you define. It can automate various manual tasks such as registering a large amount of sound data or changing settings for registered sound data.

Text in the middle of the image: Set of instructions to provide to the robot

Text on the right side of the image: (Example) ***_en files are assigned the “ENGLISH” category, and ***_ja files are assigned the “JAPANESE” category.
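The example rule above, assigning a category based on a file name suffix, can be expressed as a short Python function. This sketch only shows the rule itself. It does not call CRI Atom Craft or its robot scripting interface, and the file names are hypothetical.

```python
from pathlib import PurePath

# Rule from the example: *_en files -> "ENGLISH", *_ja files -> "JAPANESE".
SUFFIX_TO_CATEGORY = {"_en": "ENGLISH", "_ja": "JAPANESE"}

def category_for(filename):
    """Return the category for a sound file, or None if no rule matches."""
    stem = PurePath(filename).stem  # file name without its extension
    for suffix, category in SUFFIX_TO_CATEGORY.items():
        if stem.endswith(suffix):
            return category
    return None

files = ["zack_0001_en.wav", "zack_0001_ja.wav", "bgm_title.wav"]
assigned = {name: category_for(name) for name in files}
```

Files that match neither suffix are left without a category, so they can be reviewed by hand instead of being silently misfiled.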

In terms of support, they respond quickly and are always incredibly helpful. Whenever we asked about something we wanted to do, they would immediately come back with, “You can use this function to do that!” I’m constantly surprised by the versatility of the ADX library.

——Thank you for your time.

■About CRI Middleware

CRI Middleware offers sound and video middleware to game developers. Gamers will probably recognize them by their blue CRIWARE logo that appears in over 6,500 games that already use their technology.

■About CRIWARE

CRIWARE consists of audio and video solutions for a multitude of platforms including smartphones, game consoles, and web browsers. More than 6,500 titles are powered by their technology. For more information, visit their official website.

© 2007, 2008, 2022 SQUARE ENIX CO., LTD. All Rights Reserved.

CHARACTER DESIGN: TETSUYA NOMURA

Translated by Braden Noyes based on the original Japanese article (original article’s publication date: 2023-04-08 12:00 JST)