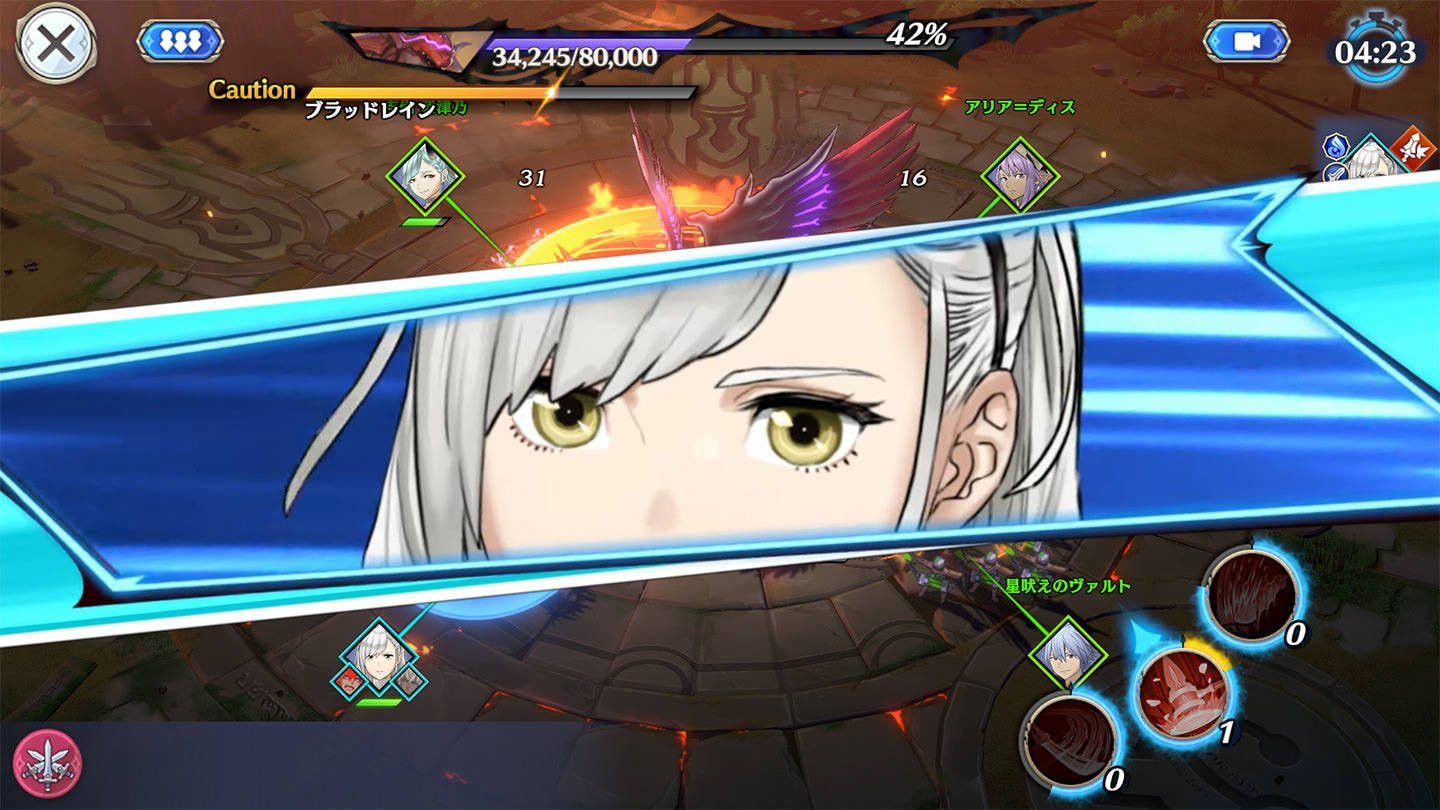

Square Enix’s free-to-play strategy RPG Emberstoria (Japan-only release) features large-scale real-time battles and elaborate character designs by Yusuke Kozaki of Fire Emblem fame. But an unexpected technical feat of the game is the way its characters talk. If you look closely, you’ll notice that the characters’ mouths aren’t simply opening and closing. Instead, they’re moving according to each vowel being pronounced, which is something you rarely see in mobile games.

This is thanks to Motion Sync, a powerful lip-syncing feature introduced in Live2D Cubism 5.0, which Square Enix and co-developer Orange Cube implemented just six months before Emberstoria’s launch. In a joint interview, Emberstoria’s dev team at Square Enix and Orange Cube, along with technology collaborators from CRI Middleware and Live2D, told us why they made this last-minute decision and how the feature works.

Traditional Live2D lip sync simply reacts to audio volume, meaning when the speaker is louder, the mouth opens more. But Motion Sync analyzes the actual vowels being pronounced (“A, I, U, E, O”) and dynamically blends preset shapes to match the dialogue. It’s powered by CRI LipSync, a voice-analysis engine developed by CRI Middleware.
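To make the difference concrete, here is a minimal Python sketch of the two approaches. It is only an illustration: the function names, the preset shape values, and the two-parameter mouth model (openness and width) are assumptions made for this example, not the actual Live2D Cubism or CRI LipSync API. In the real pipeline the per-vowel weights would come from the voice-analysis engine; here they are simply passed in.

```python
# Hypothetical preset mouth shapes, one per vowel: (openness, width), each 0..1.
# These values are made up for illustration only.
VOWEL_SHAPES = {
    "A": (1.0, 0.6),
    "I": (0.2, 1.0),
    "U": (0.3, 0.1),
    "E": (0.5, 0.8),
    "O": (0.7, 0.2),
}


def volume_lip_sync(volume):
    """Volume-based approach: louder audio opens the mouth more.

    Returns only an openness value, clamped to the 0..1 range.
    """
    return max(0.0, min(1.0, volume))


def vowel_lip_sync(weights):
    """Vowel-based approach: blend the preset shapes by per-vowel weight.

    `weights` maps vowels to non-negative weights. If the weights sum to
    more than 1 they are scaled down; if they sum to less than 1 the
    remainder is treated as silence (closed mouth).
    """
    total = sum(weights.values())
    scale = 1.0 / total if total > 1.0 else 1.0
    openness = 0.0
    width = 0.0
    for vowel, weight in weights.items():
        shape_open, shape_width = VOWEL_SHAPES[vowel]
        openness += weight * scale * shape_open
        width += weight * scale * shape_width
    return (openness, width)


if __name__ == "__main__":
    # Same loudness, different vowels: the volume-based result cannot
    # tell them apart, while the blended result can.
    print(volume_lip_sync(0.8))
    print(vowel_lip_sync({"A": 1.0}))
    print(vowel_lip_sync({"A": 0.5, "O": 0.5}))
```

The point of the sketch is that the volume-based function returns a single number regardless of what is being said, whereas the vowel-based one yields a different mouth shape for each vowel mix at the same loudness.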

“Just playing the audio in Unity is enough. You don’t need to pre-animate anything; Motion Sync handles it in real time,” explains Live2D engineer Soma Tanaka. That means not only smoother and more realistic mouth movements, but also a huge leap in efficiency for the devs, as changing voice lines or localizing to other languages doesn’t require redoing animations. This is an especially important point for a live-service game that’s constantly being updated with new content.

Orange Cube’s Koji Hosoda spotted Motion Sync’s potential early, long before Live2D Cubism 5.0 was officially released. But at the time, the feature was too early-stage for use in development. As a result, the team decided to hold off until about six months before Emberstoria’s launch.

At that point, Hosoda quickly integrated Motion Sync into Emberstoria and sent Square Enix a test build before they had even fully greenlit the idea. “Hosoda-san moved incredibly fast,” recalls content director Makiko Imamura. “He’d already confirmed it would work before we even started seriously considering it.”

Because the implementation came late, the team focused on using Motion Sync in the game’s opening cutscenes, as they’re the first thing players see. “We wanted to make the first impression impactful,” Imamura added.

But why go through the trouble of adding Motion Sync so late in development? According to Hosoda, “With the old system, the characters’ mouths looked clipped and too lip-sync-ish.” The dev team wanted to push Emberstoria’s visuals further, with nuanced speech animation that adds a level of polish usually seen in big-budget anime or 3D cutscenes. Imamura notes that the team’s commitment also stemmed from having full voice acting in many scenes: “It felt like a waste not to use that to its fullest.”

Still, Motion Sync requires extra work. It’s more complex than simple volume-based lip syncing, and it relies on Unity for integration, meaning developers can’t see the final result until it’s running on real hardware. As a result, only Emberstoria’s main characters, especially those featured early on, have Motion Sync support for now. But the team plans to expand on this.

Behind Emberstoria’s development lies a somewhat uncommon collaboration between two middleware providers. CRI LipSync, typically used in music and video production, was integrated directly into Live2D’s Cubism engine to support the new Motion Sync feature. Live2D AI engineer Dongchi Lee, who was involved from the research phase, explained that the team needed a system capable of generating mouth shapes directly from voice data, rather than using traditional text-based recognition. According to CRI Middleware’s Issei Hatakeyama, such cross-middleware integrations are “quite unusual,” making this case something of an exception in the industry.

Emberstoria is out in Japan for PC (DMM Games), iOS, and Android.

Live2D Cubism Official Homepage

https://www.live2d.com/

Live2D Cubism Download

https://www.live2d.com/cubism/download/editor/

Emberstoria

https://www.jp.square-enix.com/emberstoria

[Interviewer, editor: Ayuo Kawase]

[Interviewer, writer, editor: Aki Nogishi]