For RGG Studio’s Like a Dragon series, test automation has become an important part of the development process. The goal of test automation is to automate bug detection and streamline the debugging process as much as possible. In recent years, RGG Studio’s efforts have expanded beyond the Like a Dragon series, with test automation also being incorporated into the Super Monkey Ball series. Although they were originally developed for Sega’s in-house Dragon Engine, these automation systems are now being adapted to Unity and Unreal Engine as well.

Who are the team members making this happen, and how do they approach their work? To find out, AUTOMATON spoke with Sega’s quality engineers Naoki Sakaue, Yuto Namiki, and Kazuto Kuwabara, who specialize in finding solutions for quality-related challenges, including test automation, through technology. Be sure to check out part one of this interview too, in which we talk about the mechanisms of test automation and how it has benefited RGG Studio.

─Thanks to your extensive research so far, it seems that test automation at RGG Studio has reached a fairly advanced level, but what have been some recent developments?

Kuwabara:

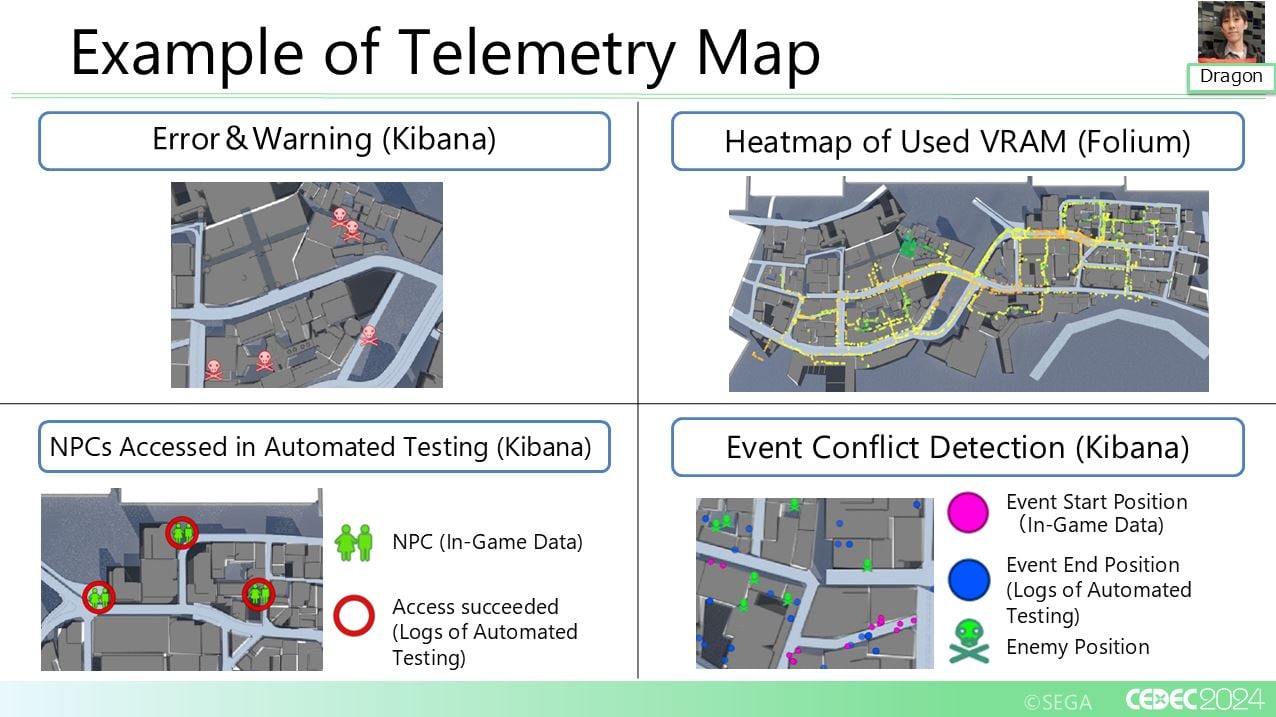

One thing we’ve been working on in particular is enhancing how we check the results of testing. For example, in Infinite Wealth, we focused on improving map-based data visualization. We did so by expanding on a feature we originally implemented in Judgment that allowed us to monitor VRAM (video memory) usage in drone races in the form of a heatmap. Since the drone races take place in the air, the number of objects displayed in-game increases, leading to higher VRAM usage, which can cause slowdowns or crashes. We introduced the heatmap feature to help us quickly identify such cases, and now, we’ve expanded upon it.

For example, we’ve made it possible to visualize various types of data, such as logs, character placement, and even items scattered on the ground. By viewing all of this information from a bird’s-eye perspective, we can more easily pinpoint potential error-prone areas and check errors when they occur. Displaying data on a map allows you to notice at a glance if, for example, there is a high concentration of bugs occurring in a particular area of Hawaii.
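As a rough illustration of the heatmap idea described above, the sketch below bins position-tagged VRAM samples into grid cells and lists the cells that exceed a budget. It is not RGG Studio's implementation: the function names, the 50-unit cell size, the 4000 MB budget, and the sample values are all assumptions made for the example.

```python
from collections import defaultdict

def build_heatmap(samples, cell_size=50.0):
    """Bin (x, y, vram_mb) samples into square grid cells and keep the peak per cell."""
    grid = defaultdict(float)
    for x, y, vram_mb in samples:
        cell = (int(x // cell_size), int(y // cell_size))
        grid[cell] = max(grid[cell], vram_mb)
    return dict(grid)

def hot_cells(heatmap, budget_mb):
    """Return cells whose peak usage exceeds the budget, worst first."""
    over = [(cell, mb) for cell, mb in heatmap.items() if mb > budget_mb]
    return sorted(over, key=lambda item: item[1], reverse=True)

# Hypothetical samples: map position (x, y) and VRAM usage in MB at that position.
samples = [
    (10.0, 20.0, 3100.0),
    (30.0, 40.0, 3900.0),
    (120.0, 20.0, 4300.0),
    (130.0, 45.0, 4100.0),
    (260.0, 310.0, 2800.0),
]
heatmap = build_heatmap(samples)
print(hot_cells(heatmap, budget_mb=4000.0))
```

Keeping the peak value per cell, rather than the average, is one possible design choice: a single spike is enough to cause a crash, so the worst case is what a reviewer would most likely want to see on the map.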

─So, the idea is to make potential technical issues visible to you in advance.

Kuwabara:

Yes, by visualizing things like where characters are placed and where in-game events get triggered, we’ve made it easier to address issues caused by the main scenario and substories being too close and thus interfering with each other.
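One way such interference could be checked automatically, once trigger positions are available as data, is a simple distance test between main-scenario and substory triggers. The following is a minimal sketch under that assumption; the trigger names, coordinates, and the 10-unit threshold are hypothetical and do not come from the interview.

```python
import math
from itertools import combinations

def find_conflicts(triggers, min_distance):
    """Return pairs of triggers of different kinds that sit closer than min_distance."""
    conflicts = []
    for a, b in combinations(triggers, 2):
        if a["kind"] == b["kind"]:
            continue  # only main-vs-substory pairs are of interest here
        dist = math.dist(a["pos"], b["pos"])
        if dist < min_distance:
            conflicts.append((a["name"], b["name"], round(dist, 1)))
    return conflicts

# Hypothetical placement data for illustration only.
triggers = [
    {"name": "main_ch3_meeting", "kind": "main", "pos": (100.0, 200.0)},
    {"name": "sub_lost_dog", "kind": "substory", "pos": (103.0, 204.0)},
    {"name": "sub_street_race", "kind": "substory", "pos": (400.0, 50.0)},
]
print(find_conflicts(triggers, min_distance=10.0))
```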

─With large-scale development, it seems crucial to be able to confirm things in the form of objective data too.

Sakaue:

We have two types of this kind of data: one that can be searched in real-time, and another that updates only once a day. The latter is particularly useful, because we can check the VRAM heatmap and go “Okay, no abnormalities today,” or, if we’ve fixed a bug, we can check again the next day to make sure it’s resolved. This ability to collectively track progress and share updates is very helpful.

─Was it mainly Kuwabara-san who was responsible for developing this system that visualizes issues before they occur?

Sakaue:

I created the original prototype, but at first, it only displayed images. It was Kuwabara who expanded upon it, adding features such as the ability to click on elements to view more detailed data.

─I find the data fascinating to look at, even as a mere player.

Everyone:

(laughs)

Kuwabara:

It’s interesting because it’s rare to view game development from such a “meta” perspective, with all sorts of logs and placement data displayed at once.

─Now, tell us about the Super Monkey Ball series. Was it difficult to automate testing for Super Monkey Ball: Banana Rumble?

Namiki:

When we were introducing test automation to Super Monkey Ball, our main task was expanding the foundation of the development environment to support more variations, adapting to the new game engine (Unity) and compatible platforms.

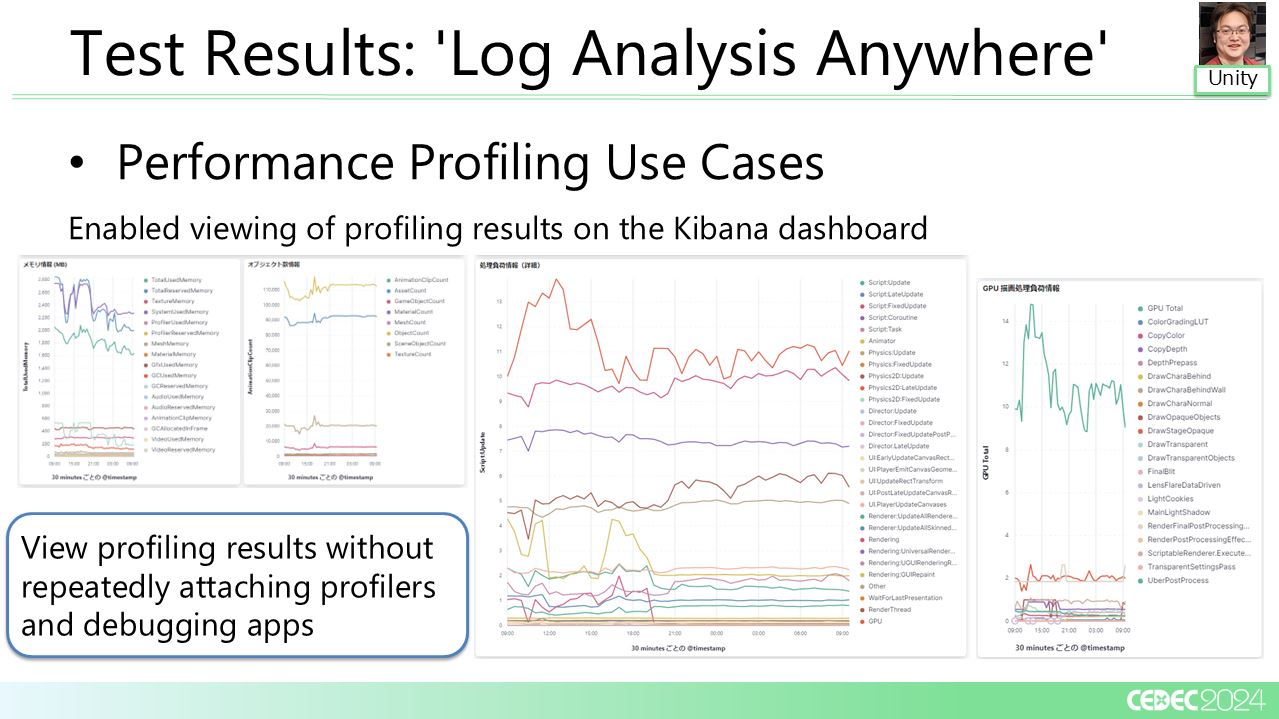

So, out of curiosity, I played around with Unity and discovered its profiling features, which allow data to be output externally through the program. Since we were already able to send test logs to the automation team for visualization, I thought, “Why not use this feature to create visualizations of performance data as well?”

Thanks to this, we were able to visualize information such as “This stage suffers from low frame rate,” “Playing this way causes textures to use more and more memory, leading to memory leaks” or “The models in this scene consume too much memory.” This allowed us to optimize the game smoothly and make it comfortable to play from an early stage.
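To make the kind of checks mentioned above more concrete, here is a small sketch that assumes the profiling data has already been exported as plain lists of numbers per stage. It does not use any Unity API; the function name, thresholds (30 fps, 50 MB growth), and sample values are assumptions chosen for illustration.

```python
from statistics import mean

def analyze_stage(fps_samples, texture_mb_samples, min_fps=30.0, growth_mb=50.0):
    """Summarize one stage's run and flag likely performance problems."""
    issues = []
    avg_fps = mean(fps_samples)
    if avg_fps < min_fps:
        issues.append("low frame rate")
    # Memory that never drops and ends far above where it started suggests a leak.
    never_drops = all(
        later >= earlier
        for earlier, later in zip(texture_mb_samples, texture_mb_samples[1:])
    )
    grew = texture_mb_samples[-1] - texture_mb_samples[0]
    if never_drops and grew > growth_mb:
        issues.append("texture memory keeps growing")
    return {"avg_fps": avg_fps, "issues": issues}

# Hypothetical exported data: (fps samples, texture memory samples in MB) per stage.
runs = {
    "stage_01": ([60, 59, 60, 58], [300, 302, 301, 300]),
    "stage_02": ([28, 25, 27, 24], [300, 305, 303, 304]),
    "stage_03": ([60, 60, 59, 60], [300, 340, 390, 450]),
}
report = {stage: analyze_stage(fps, tex) for stage, (fps, tex) in runs.items()}
for stage, result in report.items():
    print(stage, result["issues"])
```

A monotonic-growth test like this is deliberately crude. A real pipeline would probably need a longer observation window and some tolerance for legitimate loading spikes.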

─So this is essentially a Unity/Super Monkey Ball version of the visualization tool Kuwabara-san created for Like a Dragon, right?

Namiki:

That’s right. Before we had this feature, we had to go through the entire process manually each time – from launching Unity’s editor, starting the game, running tests, checking the results, sharing them, to having everyone review them individually. It was quite tedious, but now, we’ve automated all of this.

The efficiency of our testing has improved so much that we’ve gone from verifying one stage a day to finishing all the stages overnight. Some team members really struggled with manual testing, but automated testing made the checking process easier, so I think they welcomed its introduction. Since we developed this tool alongside Infinite Wealth, my next goal is to integrate Kuwabara’s visualization maps from Infinite Wealth into this system as well (laughs).

─So the idea isn’t to just automate tests, but also to identify problem areas in advance to eliminate bugs at their root – which you’ve applied to both titles.

Sakaue:

Yes. While test automation certainly helps detect bugs quickly, it also plays a very important role as a preventive measure.

─It sounds like your advocacy for test automation is spreading among Sega’s engineers. Even so, I find it interesting how Kuwabara and Namiki’s skills are developing in different directions.

Namiki:

In principle, we share the same philosophy, but each person branches out in different areas. In my case, I like developing the foundations, as well as optimizing programs and making them more versatile. So, for this project, I focused on adding support for new platforms and adapting to the new game engine.

Kuwabara:

As for me, I like discovering new functions for tools and figuring out how to put them to practical use, as well as experimenting with various tool combinations. It’s exciting for me because of how new tools can suddenly expand the possibilities of what you’re able to do. I find it really interesting when combining existing and new technologies leads you to entirely new capabilities.

Sakaue:

For quality engineers, I make sure that all team members have equal command over the fundamentals, but beyond that, I encourage each member to develop their own areas of interest. Each team faces different issues and problems – for Super Monkey Ball, it’s automating gameplay for action sequences that’s tricky, while in the Like a Dragon series, it’s the sheer amount of data and variations. I let the members identify these problems, interpret them, come up with their own solutions and implement them in their own ways. So each member is able to grow in the areas they’re passionate about.

─So when it comes to solving problems, you don’t give detailed instructions as to how they are supposed to solve them?

Sakaue:

No, I don’t give those kinds of instructions. Instead, I try to support the team so that they can work independently. Test automation didn’t start as something imposed top-down – instead, it was implemented bottom-up, as it was something the workplace wanted. Even now, I consider my role to be supporting what the workplace wants to do. Of course, if something doesn’t meet the required standards, I’ll point it out, but once they’ve mastered the basics, I leave the approach up to them.

─The way you talk about it makes it seem like it’s all fun and games, but I’m guessing that’s only one side of things?

Sakaue:

There are definitely some tough moments too (laughs).

Everyone:

(laughs)

Sakaue:

My job is to solve problems, so in that sense, it’s fun and never gets boring. Even after working on multiple entries in the series, I still encounter issues I’ve never seen before. For Yakuza 6, I was the only quality engineer, so I had to figure out everything on my own, but nowadays, we can discuss and decide on solutions as a team, which makes it a lot more fun.

─I’m sure you’ve encountered all kinds of technical issues by now, but when you come across a problem you’ve never seen before, do you feel anxious? Or do you actually get excited?

Sakaue:

…Excited, I think?

Namiki:

I’d say I feel half-excited and half-anxious?

─So you do get excited! (laughs)

Namiki:

Definitely.

Sakaue:

When a problem occurs, it’s fun to think about how to fix it.

Kuwabara:

There are various potential ways to solve a problem too, so we often discuss which approach is best.

Sakaue:

That said, I think we’re able to enjoy the process thanks to our experience so far. If there were absolutely no clues as to how to solve an issue, it would definitely be scary (laughs).

How will test automation evolve in the future?

─As test automation engineers, what goals do you have next?

Kuwabara:

Test automation has made it much easier to detect program-related errors. However, visual- and audio-related errors are still areas that test automation cannot fully address, so that’s something I want to work on next. Recently, AI that can recognize images using LLMs (large language models) has emerged, so we’re at the stage of considering whether we can make use of this technology.

Sakaue:

Every time I check in on Kuwabara’s work, he’s testing some new LLM he’s found, creating fuzzy search functions and the like (laughs).

─What’s your next goal, Namiki-san?

Namiki:

Currently, Sega’s test automation environment is still divided into three versions: one each for the Dragon Engine, Unreal Engine, and Unity. Because of this, we frequently have to adapt tools built for the Dragon Engine so that they work with Unreal Engine or Unity, or conversely, backport things made for Unreal Engine or Unity to the Dragon Engine. Whenever this happens, it makes me realize that we’re still developing programs separately for each game engine.

A challenge we still face is that we often end up using different methods to implement the same functionalities across different game engines. I would like to unify these approaches and create a test automation system that works consistently across all game engines, using the same tools and mechanisms. If we can standardize everything properly now, this would also allow us to quickly adapt when new game engines emerge in the future.
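A common way to approach this kind of unification is to hide each engine behind a shared interface so that tests are written once against that interface. The sketch below illustrates the pattern only; `EngineAdapter`, its methods, and the in-memory `FakeAdapter` are hypothetical names invented for this example and do not describe Sega's actual tools.

```python
from abc import ABC, abstractmethod

class EngineAdapter(ABC):
    """Hypothetical interface each engine-specific backend would implement."""

    @abstractmethod
    def launch(self, build_path: str) -> None: ...

    @abstractmethod
    def send_input(self, action: str) -> None: ...

    @abstractmethod
    def read_state(self) -> dict: ...

class FakeAdapter(EngineAdapter):
    """In-memory stand-in used here so the sketch runs without any engine."""

    def __init__(self):
        self.log = []
        self.state = {"stage_cleared": False}

    def launch(self, build_path):
        self.log.append(f"launch {build_path}")

    def send_input(self, action):
        self.log.append(f"input {action}")
        if action == "reach_goal":
            self.state["stage_cleared"] = True

    def read_state(self):
        return dict(self.state)

def run_clear_test(adapter: EngineAdapter, build_path: str, actions: list) -> bool:
    """Engine-agnostic test: replay actions and check that the stage was cleared."""
    adapter.launch(build_path)
    for action in actions:
        adapter.send_input(action)
    return adapter.read_state()["stage_cleared"]

adapter = FakeAdapter()
print(run_clear_test(adapter, "builds/demo", ["move_forward", "reach_goal"]))
```

Under this pattern, supporting a new engine would mean writing one new adapter class while `run_clear_test` and other tests stay unchanged.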

─So, because the engines are different, you often have to recreate the same things using different methods, which wastes your resources.

Namiki:

While the core systems of each engine may differ, the things that are needed for game development remain the same across all projects. Last year, I gave a talk where I explained how we approached test automation in Unity and Unreal Engine separately. But now, I’m starting to think we could integrate them into a single program.

Currently, each of our development tools is customized to meet the needs of each title. However, I believe it would be much more efficient to consolidate them into a single tool and make them expandable using plugins for each title. By continuously refining these aspects, I would like to create a test automation tool that works in any development environment. Ultimately, I want to do away with the notion that this is solely RGG Studio’s test automation tool and turn it into a fully standardized and general-purpose test automation package.

─If you succeed in standardizing it, it will become even more powerful.

Sakaue:

Up until now, our focus has been on continuously adding features to our test automation framework so that it can run across more environments. We first established the test automation cycle during Yakuza: Like a Dragon’s development, then made it multi-platform compatible during Lost Judgment. The next big step would be making it compatible with multiple game engines, as Namiki mentioned, and achieving standardization.

However, if we pursue standardization in this way, it will inevitably mean having to do away with certain features to maintain broad compatibility. This could result in a generalized system that lacks some of the functions needed for the Like a Dragon series, or where Super Monkey Ball ends up in a state where stages can’t be cleared.

Currently, I’m working on introducing test automation across other Sega titles as part of the Development Technology Division. But dealing with game-specific issues isn’t something a standardized system alone can cover – it requires hands-on support and customization by the quality engineering team. RGG Studio has invested heavily in training its quality engineers, so we have many skilled individuals on board. However, in other departments, we need to introduce test automation while also educating teams about quality engineering and training new talent. My goal is to expand awareness and training to cultivate more skilled engineers like Namiki and Kuwabara.

─So rather than focusing on individual titles, you’re working to expand test automation across all of Sega?

Sakaue:

Ultimately, yes. However, to achieve that, I’m currently working closely with the teams working on individual games to introduce these practices directly. In the Development Technology Division where I’m currently working, there are other members involved in test automation besides me, and we work as a team. While they focus on developing tools for standardization, I mainly go on-site to train the individual development teams. Just handing over the tools isn’t enough – if the teams don’t understand how to interpret errors or write proper tests, they won’t be able to make effective use of the system. That’s why I visit different departments and teams within Sega and actively work together with them.

What is the position of quality engineers within the company?

─By the way, what’s the typical career path to becoming a quality engineer?

Sakaue:

If you want to become a quality engineer, I would strongly recommend gaining experience as a game programmer first. Without a solid understanding of how games are built, test automation can be quite difficult to handle. Most quality engineers start by working in regular game development and then transition into this role after accumulating experience.

─So quality engineering is becoming another viable “next step” in a programmer’s career, alongside roles like director or manager?

Sakaue:

I’m currently trying to make that happen! (laughs) Sparking interest and excitement about the role so that people find it appealing is crucial. That’s why I give my talks on a daily basis to convey the significance of test automation. I also include hard data like numbers in my presentations to make a strong case.

I think that thanks to years of my advocating for test automation, there is a higher level of awareness about it now. And with an increasing number of games being developed in parallel, I feel that there has been a natural push to assign more and more people to test automation.

By the way, I mentioned that programmers often become quality engineers, but recently there has been a trend of QA testers becoming quality engineers as well. I’m quite interested in seeing how their careers evolve.

─It sounds like your grassroots activities have borne fruit. Test automation now seems well-recognized as part of game development, but how was it perceived in the past?

Sakaue:

We’ve always had support. This may be a general trend at Sega as a company, but I think there’s a strong culture of encouraging people who take on new challenges. If you produce results, people will come to trust you and rely on you for future projects.

During the development of Yakuza 6, we initially ran automated tests overnight using developers’ personal PCs during their idle time. However, this meant that if someone happened to need their PC for something else at night, we couldn’t run critical tests on that machine. At the time, I approached the director about securing machines that would be dedicated to testing, but due to budget- and schedule-related constraints, it didn’t work out.

So instead, we scraped together spare PCs and used those to prove the system’s effectiveness. By consistently demonstrating the results of our efforts in this way, we gradually built trust and credibility. Now, we have around 150 dedicated testing machines.

─How many people are typically involved in automating tests per title?

Sakaue:

At RGG Studio, we assign one leader per project. We don’t work in large teams with dozens of people, but rather with small but highly skilled teams. However, cooperation within the quality engineering team is very strong, so even when a member is dispatched to a certain project as a full-time leader, they’re still primarily a member of the quality engineering team. If someone is particularly busy during a project’s launch, other team members step in to help out. So, while there’s a dedicated leader for each project, there are always multiple people involved.

─So each engineer is deployed on their own project, but there’s a system in place to share knowledge within the team.

Sakaue:

Yes. If a problem occurs with a tool in one project, the information is shared immediately, and everyone works together to fix it across all projects. Similarly, if Kuwabara or another engineer implements a new function, others will request it and update their programs.

─It almost sounds like some kind of engineering task force – your role seems quite unique.

Sakaue:

Basically, the assigned engineer works as a dedicated member of their project, becoming an accessible contact point for the development team. While other members within the project may also be involved in the automation work, having a go-to person for consultation is crucial. In fact, quality engineers are in such a key position that we’re literally seated in the middle of the workplace. That way, if a director needs a new feature to be made, they can just turn around and ask (laughs).

─I see, it sounds like your role in the project is highly regarded.

Namiki:

Yes, we get consulted on an individual basis very often. Compared to other sections or roles, I’d say that our work is seen as kind of unique and valuable.

─Given the nature of your work, I’m guessing communication skills are also important, aren’t they?

Sakaue:

Yes, I think being approachable and easy to consult with is important. Since we’re required to keep a “bird’s-eye view” over the project’s overall health, it’s important that people feel comfortable confiding in us when, for example, they find that a certain feature is hard to use. Of course, we may not be able to respond to each demand within the project’s timeframe, but we always conduct a review at the end of each project, identifying the things we weren’t able to address and implementing improvements needed for future use.

Kuwabara:

Even while I was involved in regular development as a programmer at RGG Studio, I really felt the importance of connecting with various people involved in the project.

─Yutaka Ito (RGG Studio’s Technical Director) also previously told us that he teaches his programmers to be easily approachable. I see that this culture has taken root.

Training quality engineers

Namiki:

There are two phases in the work of a quality engineer. The first phase focuses on preparing the necessary functions for game development, while the second phase involves assembling the completed components, setting up the development and testing environments, and getting started with actual operation.

In the first phase, in order to accommodate the game’s new elements, we identify the necessary features and create them. For example, in Infinite Wealth, this was the new Hawaii map, while for Super Monkey Ball, it was the new physics engine. We needed to put together new mechanisms to support their implementation and testing, while at the same time establishing the tools and server workflows for development as early as possible.

During the second phase, the test automation cycle gets set in place, and actual testing begins. This is when our focus shifts to communication with other parties and keeping an eye on progress. In RGG Studio’s projects, QA testers are also involved in writing automated tests, so our main task is to ensure that the test automation cycle runs smoothly by teaching the development team how to run tests and investigate errors.

Sakaue:

I think it can be roughly divided into three phases depending on the project’s timeline. First, there’s an initial preparation phase that starts as soon as we get a hint that a project might be launching. This is when we focus on setting up the development and testing environments.

From the middle phase, we begin coordinating with key members of the project to determine what features are necessary. This includes not only the developers, but also members responsible for testing and localization. For instance, when it comes to localization, the people involved often work in our overseas offices, meaning we have to account for time differences. It’s not uncommon for us to have a meeting with the US team in the morning, followed by a meeting with the UK team in the evening.

In the final phase, our main task is to identify and reduce inefficiencies in the development and testing workflows, collect and analyze errors, and prioritize and report the critical ones. This stage is all about monitoring the process and constantly making improvements.
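The "collect, analyze, prioritize" step described above could look something like the following sketch, which groups identical errors and ranks the groups by a simple score. The severity labels, the weights, and the sample error messages are assumptions for illustration, not details from the interview.

```python
from collections import Counter

# Assumed weights: one crash outranks many warnings.
SEVERITY_WEIGHT = {"crash": 100, "error": 10, "warning": 1}

def prioritize(errors, top_n=3):
    """Group identical errors and rank groups by severity weight times occurrence count."""
    counts = Counter((e["severity"], e["message"]) for e in errors)
    ranked = sorted(
        counts.items(),
        key=lambda item: SEVERITY_WEIGHT[item[0][0]] * item[1],
        reverse=True,
    )
    return [
        {"severity": sev, "message": msg, "count": n}
        for (sev, msg), n in ranked[:top_n]
    ]

# Hypothetical errors collected from one night of automated test runs.
errors = [
    {"severity": "warning", "message": "missing subtitle"},
    {"severity": "crash", "message": "out of VRAM in area 12"},
    {"severity": "error", "message": "NPC stuck in wall"},
    {"severity": "error", "message": "NPC stuck in wall"},
    {"severity": "warning", "message": "missing subtitle"},
    {"severity": "warning", "message": "missing subtitle"},
]
for row in prioritize(errors):
    print(row)
```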

─I see, the nature of your work changes quite drastically depending on the phase.

Kuwabara:

I basically follow a similar cycle. In the “middle phase,” I look for concentrations of errors, create maps using log analysis, and report on the error-prone areas.

─Do you have a favorite part of the process?

Sakaue:

I quite enjoy building systems.

Namiki:

It’s the same for me. I enjoy watching over the system once it’s built and in operation.

─I’m guessing it feels like raising a child for you.

Sakaue:

I also enjoy the latter phase of monitoring the tests. On days when I see that the automated tests are running at a 100% success rate, I find myself grinning as I watch over them (laughs). On the other hand, when the success rate is 0%, that means I have a lot of work to do, and things can get really hectic – these are usually the days when I keep encountering additional problems one after the other, so I’ll often be sighing to myself.

Kuwabara:

I find the task of adapting the test automation environment to new minigames challenging and fun.

─Has anything about your situation changed since your “Fully Automated Bug Tracking System” presentation won the Grand Prize in the Engineering Category at CEDEC 2021?*

*CEDEC (Computer Entertainment Developers Conference) is Japan’s largest conference for computer entertainment developers to share video game technology and knowledge.

Sakaue:

Thankfully, since receiving the award, we have become better known within the company, and I feel that our recognition within the industry has also increased significantly. I think that we have been able to properly communicate the importance of having dedicated engineers to automate testing. At conferences such as CEDEC, the number of lectures on test automation has grown considerably compared to about two years ago, so I feel that this has had a big impact.

─Have other people in the QA industry started consulting with you more?

Sakaue:

I mentioned earlier that I used to be quite isolated. Similarly, the people working on test automation at other companies often do it in small groups as well. At CEDEC, we started consulting with each other, and the Q&A corner, where you can talk to the audience after the lecture, turned into a full-on consulting session (laughs).

Namiki:

Since everyone there was very familiar with the field of test automation, the Q&A session really turned into a specialized consultation.

Sakaue:

We even recommended automation solutions to each other based on the kind of games we were working on.

Namiki:

Things got really heated, and we ended up exceeding the Q&A time limit and getting told off by a staff member (laughs).

Sakaue:

We went on talking for about an hour (laughs).

─So, a community was formed from there.

Sakaue:

Since last year, I’ve become personally involved in CEDEC, not only as a presenter, but also as an organizer. I would like to play a part in creating a community where people can share technical information with each other. Also, I’ve been receiving many inquiries about training quality engineers, which is another challenge I’d like to tackle. RGG Studio has a large number of skilled human resources because its quick release cycle makes it easy to gain experience, but this isn’t the case with other titles. I would like to offer some help in this respect.

─I feel like Sakaue-san’s goal of creating a community has been realized to a fair degree. Do you have some ambitions of your own (to Namiki and Kuwabara)?

Kuwabara:

Since I originally studied artificial intelligence in college, I would like to integrate AI into test automation. I want to develop an AI system that can assist development, like a so-called AI agent. It could, for example, run tests overnight when instructed, provide explanations for errors, or even suggest solutions. Additionally, I’d like to use AI to lighten the burden of menial tasks, such as object placement, and basically have it act as a copy of myself.

─I feel like AI assistants are close to becoming fully practical.

Kuwabara:

When it comes to coding, there are already plenty of systems out there that can generate programs automatically. However, general-purpose models like OpenAI’s don’t possess knowledge of coding for the Dragon Engine, so they’re not something we can apply as-is. That’s why I’d like to develop a model that has been specifically trained to write code for the Dragon Engine.

Namiki:

Thanks to our various activities, such as the CEDEC presentations, we’ve been getting more and more inquiries from young engineers both inside and outside the company who are interested in the work of quality engineers. This has led me to believe that the field of quality engineering will see an increase in young talent in the near future, so I would like to develop tools that can be used in a wider range of environments and make test automation more accessible for them.

This is part of why I keep emphasizing standardization, optimization, and the need to eliminate engine-specific quirks. I want to create a foundation that can easily be introduced to new environments and which young engineers can freely build upon with new things. Ideally, I would like to make it so that even a rookie can easily run automated tests on a simple program they made during their training – that’s the level of accessibility I would like to work on achieving.

─So, you’re aiming to improve accessibility in multiple respects and make the complex technology easier to use for a wider range of users.

Sakaue:

Yes, I’m conscious of removing the dependency on specific individuals when it comes to using technology, the so-called “de-personalization” of technology. The reason I started sharing my knowledge in the first place was that, back when I was the only one handling quality engineering, I wanted to spread that expertise to other teams within the company. As I started taking on multiple projects at once, I realized that it was no longer feasible for me to handle everything on my own. This made it clear to me that every project needed at least one person capable of handling test automation. If I had continued to manage everything by myself, the number of quality engineers wouldn’t have increased this much, and we might not have progressed as far as we have.

Instead, the number of team members increased, broadening our ability to deal with issues. And even in my talks, I don’t just share information, but also receive a ton of valuable feedback and new ideas. Of course, I want people to make full use of the information I provide, but there is also an aspect of it being a “return of profit” to me. In technology development, there are just some things you can’t accomplish alone. For example, the idea of writing automated test scripts that can clear a game actually came from someone who attended one of my presentations, and the suggestion to make those scripts work in Python came from Kuwabara.

─You want to see technology develop more and more through collective intelligence.

Sakaue:

Of course, when it comes to the core aspects of game development, a lot of things are difficult for a company to share publicly. However, quality assurance, including test automation, is something that everyone struggles with, and it’s a relatively easy field for exchanging information. By putting our knowledge out there, I think we’re making it easier for other companies to do the same, thus creating a positive cycle. At the same time, it’s also a field where we can’t afford to fall behind, so we’ll continue to work hard in the future.

─We’re looking forward to your future endeavors! Thank you for your time.

[Writer, editor: Daijiro Akiyama]

[Interviewer, editor: Ayuo Kawase]