SEGA’s Like a Dragon series, developed by RGG Studio, is known for its expansive games and rapid release schedule. To maintain this scale and speed of development, efficient testing and debugging are a must. In many game development cycles, testing and debugging typically take place during the final stages, after or right before all the game’s content has been made. However, at RGG Studio, these processes begin early on in development and run in parallel with game production. This approach allows the team to keep up their rapid pace without compromising quality.

How is this possible? The key lies in the studio’s extensive use of test automation, a technology refined over the years at SEGA. AUTOMATON spoke with SEGA’s quality engineers Naoki Sakaue, Yuto Namiki, and Kazuto Kuwabara, who specialize in test automation and quality assurance, to get a better idea of their approach to automated testing.

─Please introduce yourselves.

Yuto Namiki (hereafter Namiki):

I’m Namiki from SEGA’s 1st Development Division. I joined the company in 2016, so it’s my ninth year here. I’ve been a part of RGG Studio ever since, and I was a programmer for Yakuza 6: The Song of Life. After gaining experience in programming various gameplay systems, I was assigned to the Super Monkey Ball series as a UI and localization programmer. For the latest title in the series, Super Monkey Ball Banana Rumble, I joined the quality engineering team.

Kazuto Kuwabara (hereafter Kuwabara):

I’m Kuwabara from SEGA’s 1st Development Division. I’ve been with the company for seven years, since 2018. Like Namiki, I initially joined as a game programmer, and I was involved in the development of Yakuza: Like a Dragon and Lost Judgment, mostly overseeing the adventure parts and minigames. Since then, I’ve gradually become involved in quality engineering tasks, becoming the quality engineering team leader for Like a Dragon: Infinite Wealth.

Naoki Sakaue (hereafter Sakaue):

I’m Sakaue, part of the Development Technology Division that provides technical support for SEGA Group. I joined the company back in 2004, so I’ve been here for twenty years already (laughs). At the start, I was also a game programmer, working on the Let’s Make a Soccer Team! series and other projects. I’ve been involved in the Like a Dragon series’ development since Yakuza 3. Initially, I wasn’t involved in test automation, but in areas like stage rendering and research into in-game day and night transitions. The research I handed down became the foundation for the seamless time progression implemented in Infinite Wealth’s Dondoko Island.

Now, I’m focusing on the implementation of automated testing in all SEGA titles as part of the Development Technology Division.

What is test automation?

─Let’s start from the basics: What is test automation?

Sakaue:

This might get a bit long, but allow me to explain. In game development, we have two processes called testing and debugging. Testing involves creating a plan of what to test in a game based on its specifications, and testing (playing) the game based on that plan to discover bugs. The bugs are reported and then verified after they’re fixed. What I’ve described so far is the job of a QA tester.

Debugging, on the other hand, involves investigating the causes of discovered bugs and fixing them. This is the job of the development team, primarily the programmers.

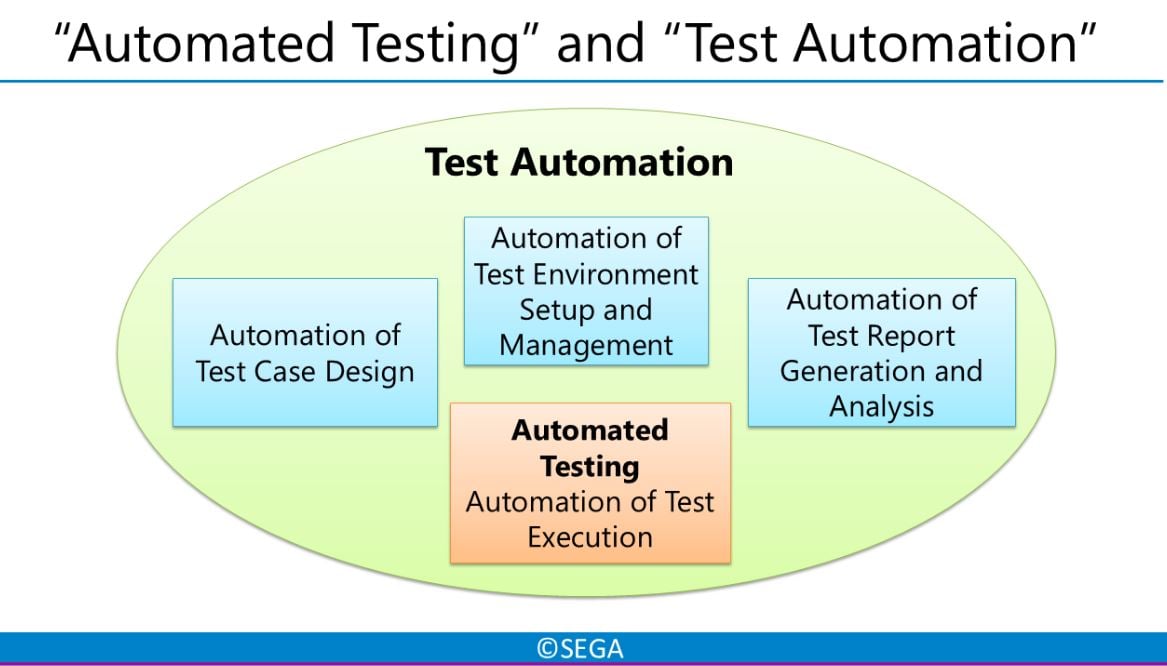

That brings us to test automation – this is the process of automating tasks related primarily to the “testing” process I just described. The image above might give you a good idea of what I’m talking about.

Moreover, at RGG Studio, we go beyond the scope of automated testing and automate the entire development environment. This includes automated packaging*, which we do from the early stages of development onwards.

*Packaging in game development refers to the process of compiling all data required to run the game.

Going a step further from automated packaging, we’ve also automated the process of setting up the testing environment and installing packages needed for testing. Automated testing itself tends to be what people focus on, but we also automate the process of setting up a testing plan, as this is actually the more demanding part of the testing process. That said, in our lectures, people are always the most impressed when they see the game being played automatically (laughs).

─That reminds me, you gave a rather memorable lecture at CEDEC 2020 (via Famitsu), where you introduced an almost fully automated bug tracking system. How long have you been researching automated testing?

Sakaue:

For quite a long time. At first, we only dabbled in automated packaging and automated error detection, but we made the tools we needed to go further during the development of Yakuza 6, when we started automating the analysis of in-game logs and the issue tracking system for keeping track of bugs and tasks. Then, by the time Yakuza: Like a Dragon was released in 2020, we had created the catchy-sounding “fully automated bug detection system” (laughs).

This is how it works: the history of actions you performed when playing the game manually (where you travelled, who you talked to, what items you used, etc.) is converted into commands and recorded, then automatically output as replay data (scripts), which you can edit manually and run as automated tests. Replay data continues to be recorded while automated tests run, and if a bug occurs during an automated test, the replay data gets saved so you can play it back later and encounter the bug yourself. Often, you can’t reproduce a bug just by warping to its coordinates, because you also need to recreate the steps leading up to it. That’s why it’s important to record each step.
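To make the record-and-replay idea concrete, here is a minimal sketch in Python. It is only an illustration, not SEGA’s implementation: the `Command` format, the action names (`move`, `talk`, `use_item`), the JSON-lines script layout, and the `game_step` callback that stands in for the game are all hypothetical. It shows the three properties Sakaue describes: actions become editable script lines, a replay keeps recording while it runs, and a failing run returns the steps leading up to the bug rather than just its location.

```python
import json
from dataclasses import dataclass, asdict
from typing import Callable, List, Tuple


@dataclass
class Command:
    """One recorded player action (hypothetical format)."""
    action: str  # e.g. "move", "talk", "use_item" (illustrative names)
    args: dict   # action parameters, e.g. {"x": 10, "y": 20}


class ReplayRecorder:
    """Converts each action the player performs into a command, in order."""

    def __init__(self) -> None:
        self.commands: List[Command] = []

    def record(self, action: str, **args) -> None:
        self.commands.append(Command(action, args))

    def to_script(self) -> str:
        # One JSON object per line, so a tester can edit the script by hand.
        return "\n".join(json.dumps(asdict(c)) for c in self.commands)


def load_script(text: str) -> List[Command]:
    """Parses a replay script back into commands, skipping blank lines."""
    return [Command(**json.loads(line)) for line in text.splitlines() if line.strip()]


def run_automated_test(
    script: str, game_step: Callable[[Command], None]
) -> Tuple[bool, str]:
    """Plays a script back while recording a fresh replay.

    If game_step raises (standing in for a crash), the replay up to and
    including the failing command is returned so the bug can be reproduced.
    """
    recorder = ReplayRecorder()
    for cmd in load_script(script):
        recorder.record(cmd.action, **cmd.args)
        try:
            game_step(cmd)
        except Exception:
            return False, recorder.to_script()
    return True, recorder.to_script()
```

For example, recording a `move`, a `talk`, and a `use_item` and then replaying that script against a game that crashes on the `talk` command returns a failing result whose saved replay ends at `talk`, which is exactly the sequence a programmer needs to reproduce the crash.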

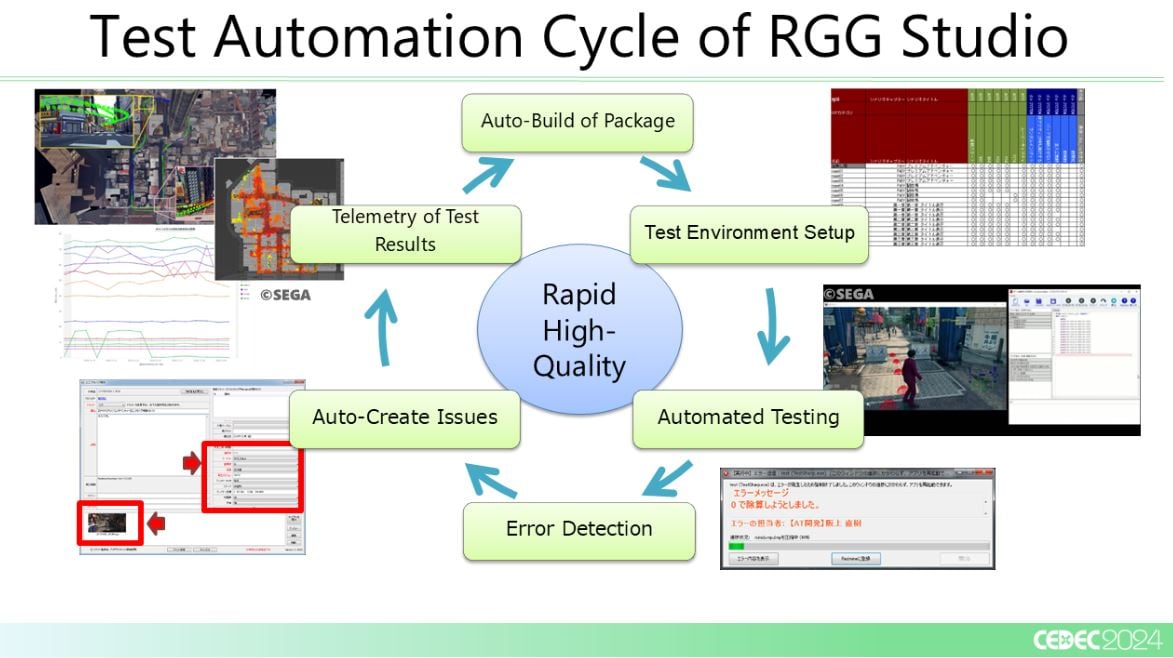

Also, I’d like to mention that just implementing automated testing doesn’t mean much on its own, because you won’t know what the results of the tests are. That’s why we needed a crash report function to detect bugs. There’s also a function that records information needed to investigate detected bugs, as well as a way to check the status of successful tests. Then, by implementing a system that gives us a visualization of performance, we were able to make iteration* more efficient, increasing the overall efficiency of the development process.

*Iteration in software development refers to repeating a short cycle of making changes and testing them.

How does test automation help?

─How much has introducing test automation benefitted you?

Sakaue:

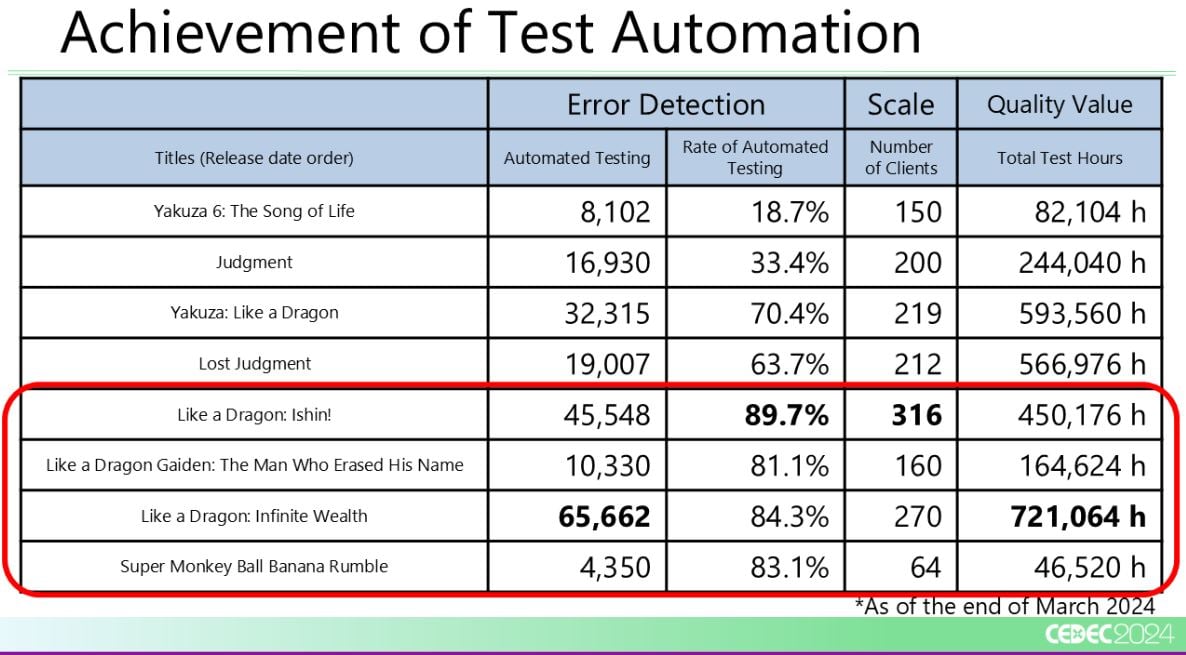

The benefits have been quite obvious. When I give lectures, I always illustrate them with numbers (laughs). For example, we detected fewer than 1,000 errors when developing Yakuza 0. Since then, we’ve made various improvements to the program and have become able to perform a wider variety of tests, which led us to discover around 60,000 errors in Infinite Wealth. Most importantly, the percentage of errors found through automated testing has been steadily increasing the whole time, and is now over 80%.

The total running time for tests has also been gradually increasing. For Infinite Wealth, we ran full tests of the main scenario and substories, as well as tests of the minigames, every day, in each language and on every platform, resulting in a total of about 700,000 hours of testing (performed during the night). We have more and more machines running automated tests 24 hours a day now, and it’s getting to the point where machines that started running tests in the morning are already showing errors by the afternoon.

The time it took us to create the automated tests and implement the automated testing system for Infinite Wealth was about 5,000 hours. This is less than one-hundredth of the total 700,000 hours of running time I just mentioned. This means that each person on the team is doing the work of more than 100 people (laughs).
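The ratio behind this comparison, using only the two figures Sakaue gives (and treating an hour of machine running time as comparable to an hour of manual play, which is the interview’s framing rather than a measured equivalence):

```math
\frac{5{,}000\ \text{h}}{700{,}000\ \text{h}} = \frac{1}{140} < \frac{1}{100},
\qquad
\frac{700{,}000\ \text{h}}{5{,}000\ \text{h}} = 140
```

Each hour spent building the tests and the system corresponded to roughly 140 hours of test execution, which is where the “more than 100 people” comparison comes from.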

By putting it like that, I might have given you the impression that we were able to achieve a huge cost cut, but I’d like to emphasize that this was only possible thanks to our accumulated efforts to improve the system over time – it’s not a level of efficiency we could have hoped for right from the start.

For Super Monkey Ball Banana Rumble, it was our first time implementing automated testing in the series’ history, so the efficiency was only about 10 times higher than that of human labor alone, but even that was a lot higher than what we’d anticipated. These numbers were possible because we were able to reuse mechanisms developed for the Like a Dragon series. I think that when you introduce automated testing for the first time, the initial benefit is a lot more noticeable in terms of quality than it is in terms of cost efficiency.

─It seems like a major reduction in man-hours overall.

Sakaue:

Also, people usually associate testing with the late stages of development. However, at RGG Studio, we use our experience and systems from previous games to start testing not in the beta phase, but as early as the alpha and prototype phases.

─Wait, so you do testing in parallel with development from the start?

Sakaue:

Basically, as soon as development starts, we start preparation for testing.

─That’s surprising. Does that mean you don’t have a dedicated testing period at RGG Studio?

Sakaue:

Of course, there is a period of testing and debugging for final, manual verification during the end stages of development. However, we don’t want to have any fatal bugs left over at this point, so we deal with them in advance. We want to fix any obvious bugs, like bugs that cause the application to crash when you go to certain coordinates or bugs related to missing collision detection, as soon as possible. Everyone remembers the details of a bug that occurred last night, but if a bug gets reported a month after it occurred, we may have lost track of what it is, or, even worse, the bug may have led to another bug in the meantime. As such, it’s a lot less costly to just fix things early on.

─True, it makes sense to want to iron out bugs as early on as possible.

Namiki:

Before we introduced automated testing, it used to take longer for us to get to debugging after a bug’s initial discovery. Then, when it came time to debug, we’d have to dedicate extra time to investigating the conditions for its occurrence, which would slow down the pace of development. Even if we wanted to concentrate on other tasks, we’d get interrupted by bug investigations and fixes.

After introducing automated testing, we created a cycle whereby information on bugs gets sent in batches. This allowed us to fix bugs found overnight by noon the next day, generate a new package automatically by night, and have the fix ready by the following morning. This has allowed us to develop games at a completely different speed than before. Since the bugs get fixed faster, we have more time on our hands to devote to other areas of development. Seeing games come together at such a rapid pace really drove home to us how amazing test automation is.

Kuwabara:

Letting even a single fatal bug remain in the game in the final stages of development is bad news. By using automated testing, we can guarantee that the main story and all substories have been cleared every day, and that the minigames are functioning properly too, which gives us a lot of reassurance. In turn, we get more time to improve the game and add new features to it.

Sakaue:

One more advantage of test automation is that we can share the results with our teams that do manual testing and localization testing. For example, a common issue we get is that a package won’t run in a specific language. But now, the other teams can consult the automated test results and decide which package to use for their own testing based on them, which I think is really useful.

Due to time differences, we can’t always communicate in real time with staff working overseas, so we used to have incidents where information was not communicated properly, leading them to select non-functional packages. However, automated testing has solved this problem for us. The communication involved in dealing with bugs is time-consuming, and because it is daytime in Japan when it is nighttime overseas, it also led to unhealthy working habits, so it wasn’t something we could just ignore. I’m really glad we’ve managed to resolve this issue.
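As a rough illustration of how another team might choose a package from shared automated test results, here is a minimal Python sketch. The data layout is entirely hypothetical (a numeric `package_id` where higher means newer, and one pass/fail record per test per language); the interview does not describe SEGA’s actual format. The rule it implements is simple: for a given language, pick the newest package for which every automated test passed.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class AutoTestResult:
    package_id: int  # hypothetical: a higher id means a newer nightly package
    language: str    # e.g. "ja", "en"
    passed: bool     # outcome of one automated test on that package/language


def pick_package(results: List[AutoTestResult], language: str) -> Optional[int]:
    """Returns the newest package whose automated tests all passed for `language`.

    Returns None if no package has a clean run for that language.
    """
    all_passed: Dict[int, bool] = {}
    for r in results:
        if r.language == language:
            # A single failing test marks the package unusable for this language.
            all_passed[r.package_id] = all_passed.get(r.package_id, True) and r.passed
    usable = [pid for pid, ok in all_passed.items() if ok]
    return max(usable) if usable else None
```

Treating one failure as disqualifying mirrors the scenario Sakaue mentions, where a package “won’t run in a specific language”: an overseas team can pick an older package that is known to work in their language instead of waiting for someone in Japan to answer.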

Test automation helped us with efficiency, but that’s not all

─What have been some common bugs occurring in the Like a Dragon series?

Sakaue:

Each title is prone to specific bugs, so it’s important to identify what kinds of bugs are likely to occur in a game and prepare the necessary testing to intercept them. For the Like a Dragon series, the trickiest part is the connection between different elements of the game. The games are generally structured around a main story, with substories and minigames scattered throughout. With this kind of structure, it’s common for errors to occur where the minigames connect with the main story.

For example, you finish playing a minigame, get warped to strange coordinates, and the game crashes. When a bug like that arises, it has to be fixed before development of the main story can resume. That’s why, for Yakuza games, it’s very important to check the progression of the main scenario all the way through in one go: not just from start to finish, but as a continuous experience. Infinite Wealth included essential side content like Dondoko Island and Crazy Delivery, so it was crucial to make sure these sections didn’t interfere with each other.

─I can imagine how difficult testing Like a Dragon games must be, given how NPCs change places with in-game time progression and events.

Sakaue:

Problems can easily arise when characters from the main scenario are located close to characters from a substory, so we thoroughly check such places. From Yakuza 6 onwards, we’ve implemented a system that clears the game automatically. Before that, every time we’d submit a package to the manual testing team, the game had to be tested by hand from start to finish in a continuous run, so we’d assign one person for each chapter and go through it manually. But now that we can do this automatically, we’ve been freed from this process (laughs). Although we still have to do final verification manually, getting rid of the repetitive checking process was a huge achievement for us.

─Are there any difficulties particular to the Super Monkey Ball series?

Namiki:

Compared to the Like a Dragon series, the Super Monkey Ball series has simpler gameplay and is more compact, plus the physics and behavior of the ball have been refined over many years. That’s why we focus less on the core of the program and more on checking the stage and environment graphics, as well as the functionality of new gimmicks.

There is one important thing I’d like to mention though… the Super Monkey Ball series is challenging in terms of skill. If you assign different people to different stages of the game, the people in charge of the later levels have a really hard time (laughs). We worked on Super Monkey Ball Banana Mania before introducing automated testing, and there were only one or two people on the development team who could clear all of the levels. As a result, we had to basically rely solely on them and the QA testers for the later stages of the game. We knew we couldn’t remain dependent on human labor for this, which was a big factor that led to us introducing automated testing.

─Hypothetically, if the game were released without proper testing and it turned out that the difficult stages had bugs, that would be a problem.

Namiki:

Exactly. By the way, the approach to test automation is different for the Yakuza series and the Super Monkey Ball series. Like a Dragon games allow you to defeat enemies even by using random inputs to a certain extent, which is a valid way to test. But if you try to do random inputs in a Super Monkey Ball game, you just end up falling off the stage immediately, so there’s no way you can test with random inputs (laughs). That’s why we developed AI-controlled commands to test Super Monkey Ball games.
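Why random inputs fail on a Super Monkey Ball-style stage can be shown with a toy simulation. This is a deliberately simplified sketch under invented assumptions: a ball on a narrow track with a constant sideways drift (as on a tilted stage), where each stick input nudges it left or right. The numbers are arbitrary, and the simple steer-back-to-center rule is only a stand-in for the “AI-controlled commands” Namiki mentions, whose real design the interview does not describe.

```python
import random

TRACK_HALF_WIDTH = 1.0  # toy value: the ball falls off if |x| exceeds this
DRIFT = 0.05            # toy value: constant sideways push, e.g. a tilted stage
STEER = 0.2             # toy value: lateral movement per unit of stick input


def simulate(policy, max_steps: int) -> int:
    """Returns the number of steps survived before falling off (or max_steps)."""
    x = 0.0  # lateral offset from the center line
    for step in range(max_steps):
        u = policy(x)  # stick input in {-1, 0, 1}
        x += STEER * u + DRIFT
        if abs(x) > TRACK_HALF_WIDTH:
            return step + 1
    return max_steps


def random_policy(rng: random.Random):
    # Monkey testing: ignore the game state and press random directions.
    return lambda x: rng.choice((-1, 0, 1))


def steering_policy(x: float) -> int:
    # Goal-directed stand-in for "AI-controlled commands": steer back to center.
    if x > 0.1:
        return -1
    if x < -0.1:
        return 1
    return 0
```

With these values, `simulate(steering_policy, 1000)` survives all 1,000 steps, because the rule keeps the ball within about ±0.35 of the center line, while `simulate(random_policy(random.Random(0)), 1000)` falls off long before that, since the drift accumulates and random presses never correct it. Random inputs can still be useful where the game tolerates them, as Namiki notes for Like a Dragon combat.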

In the latest game, Super Monkey Ball Banana Rumble, we implemented an online multiplayer mode for up to 16 players and multiple new game rules and items, which made testing a real challenge. The most daunting part was manually testing the competitive online mode with all 16 people. We had to wait for moments when people on the team had extra time on their hands, but the best we could manage was about twice a week. These kinds of tests have become a lot more manageable with the help of automated testing.

─So, the Like a Dragon series complicates things with its variety of game content and sheer volume, while Super Monkey Ball is tricky because of its high difficulty.

Namiki:

The automated test certainly plays Super Monkey Ball way better than I do… (laughs).

Everyone:

(laughs)

─I’m curious about the history leading up to the practical implementation of test automation. How was testing conducted before the fully automated testing system was completed?

Sakaue:

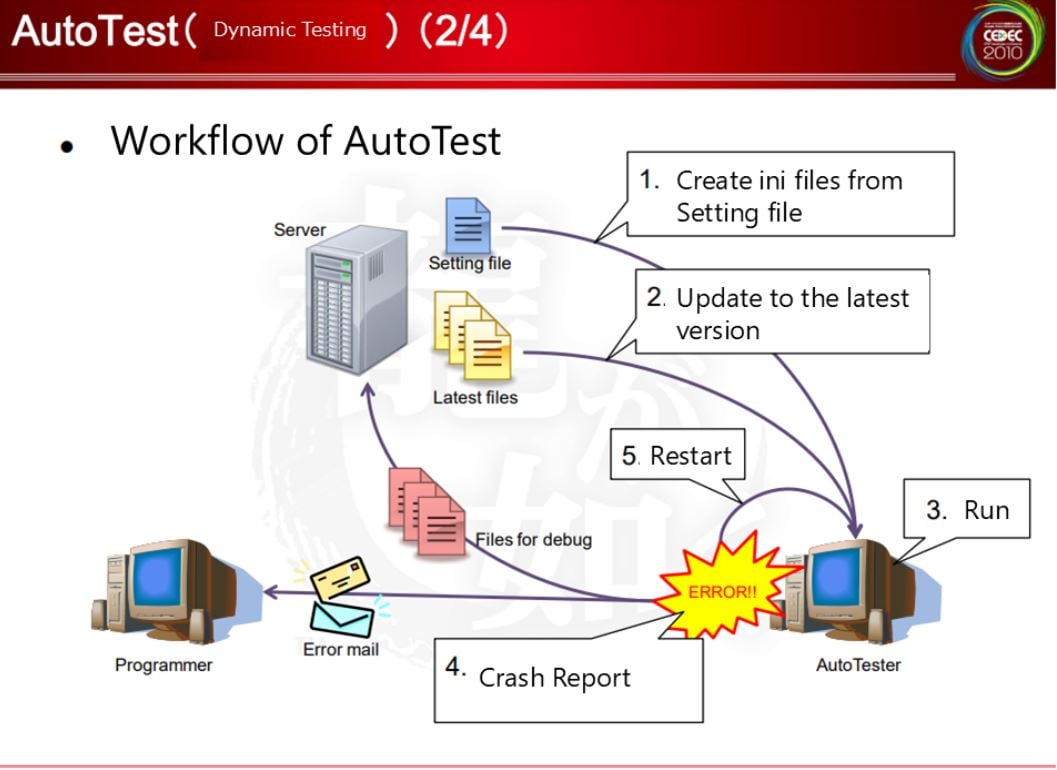

When I joined RGG Studio (at the time of Yakuza 3’s development) we already had a prototype in place for automated testing. Originally, as explained in the CEDEC 2010 presentation, the lead programmer back then had developed a prototype as a side project in his spare time, and that served as the basis for what would become the automated testing system.

This was applied in Yakuza 4. Up until Yakuza 3, there wasn’t a cycle yet for automatically setting up the test environment, but we did have a system for automated gameplay. Before leaving for the day, everyone would set up their PC to launch the game and configure it to repeatedly perform random inputs in Premium Adventure mode. Then, when we arrived the next morning, we would check for bugs, and the programmers would discuss the bugs with each other as they fixed them. You could say we were doing semi-automated testing.

Then, for Yakuza 4, we systematized this process. Since it was now possible to start running automated testing with the push of a single button, it became our culture to always start up testing before leaving the office for the day.

─So you had the idea for test automation a long time ago.

Sakaue:

Actually, the foundations of automated testing lie in arcade game development. If you trace the origins of the Like a Dragon development team, you’ll find that quite a few of us originally worked on arcade games. Before you put in a coin and start playing an arcade game, the machine plays a demo clip, doesn’t it? That’s not just a movie, but actual footage of the game in action. Many of us have experienced making these, so when we transitioned to console game development, the idea of trying to automate things came to us naturally.

─That’s a very interesting connection.

Sakaue:

I must note, however, that since arcade games and console games differ in required playtime and the aspects that need testing, simply running random inputs wasn’t enough to cover everything. To address this, we gradually refined the system by adding features that allowed us to set specific conditions and test parameters, shaping it into what it is today.

─So this was something that was gradually built up with you at the center of things, Sakaue-san. At what point did automated testing take shape as a proper, packaged system?

Sakaue:

It really started to take shape when, starting with Yakuza 4, I took over the prototype that the lead programmer had been building as a side project and became the dedicated person in charge of test automation. The title where our efforts truly became serious was actually Binary Domain (a TPS developed by RGG Studio).

In our CEDEC lectures and other presentations, we primarily showcase examples from the Like a Dragon series, so that’s probably the strongest association people have. However, for me personally, Binary Domain was where test automation truly began in earnest, so it’s stayed with me as a memorable title.

Why did you decide to become a quality engineer?

─Sakaue-san has already given numerous lectures on automated testing and is well-known in the industry, but why did you, as programmers, decide to go down the path of quality engineers? (to Kuwabara and Namiki)

Kuwabara:

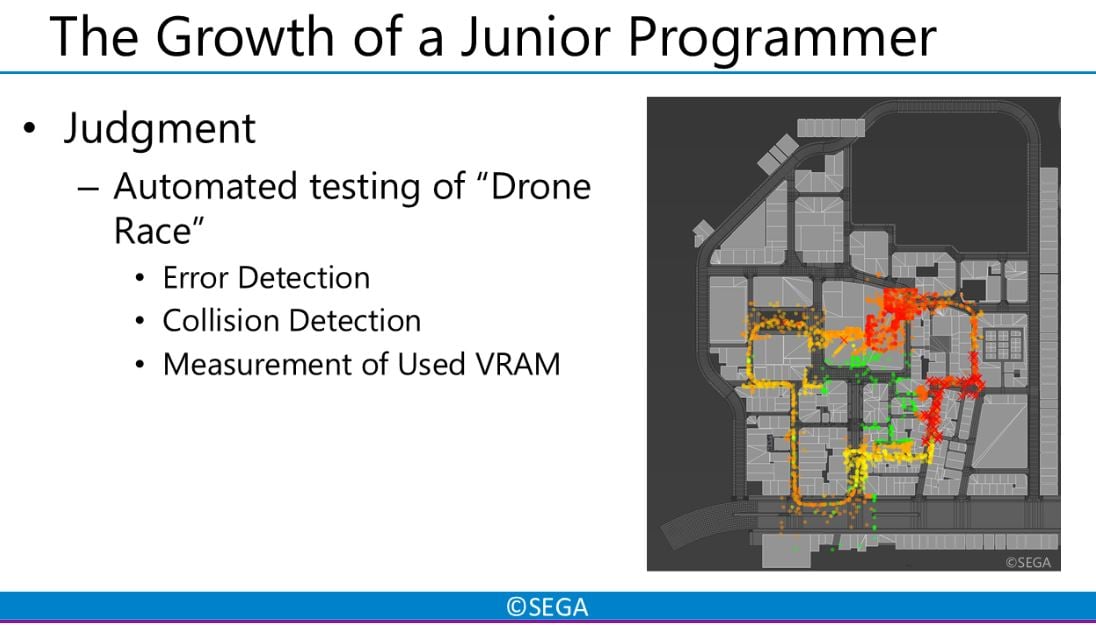

During my time as a programmer for Judgment, I was assigned to create scripts and develop an automated program for the drone races. Alongside those tasks, I helped out with test automation. I found it fascinating how the system could automatically play through the scenario and content to detect bugs, which sparked my interest in the field.

Later, during the development of Yakuza: Like a Dragon and Lost Judgment, I worked on log analysis and automated testing for my own minigames. After actively expressing my interest in quality engineering, I was eventually appointed as the leader of the quality engineering team for Infinite Wealth.

─Now that you mention it, the drone racing in Judgment and Lost Judgment seems like it would be tricky in terms of collision detection and bugs.

Sakaue:

I actually have some documentation from back then. This is a visualization of memory usage during the drone races. Since drones allow players to view the map from high up in the air, memory load can get quite high. Back when Kuwabara was a newcomer, he worked on visualizing this kind of data.

─I remember being naughty when playing Lost Judgment and checking if I could phase through any of the walls, but I couldn’t, which left me impressed with how thorough the collision detection was.

Sakaue:

(laughs) Yeah, we put a lot of time into the drone races. We used dozens of machines to run collision tests, making sure the drone interacted properly with all of the objects, including walls. Even things like AC condenser units had proper collision detection, so we had to test crashes from all sorts of angles using automated testing.

─Why did you decide to become a quality engineer, Namiki-san?

Namiki:

I joined the quality engineering team about two years ago. Back then, I was working as a programmer on Super Monkey Ball Banana Mania, and around the time the project wrapped up, Sakaue-san reached out to me. I thought it would be interesting to try implementing the Like a Dragon series’ automated testing system into Super Monkey Ball’s development environment, so I joined the team eager to take on the challenge. That’s how I got to where I am now.

A typical programmer’s job mainly revolves around creating in-game mechanics and gameplay elements. However, when it comes to test automation you also have to develop tools that work across different consoles, development kits, and PCs. On top of that, you need to handle data exchange within the team, set up and maintain systems for distributing information to development servers, and more. At first, it was a completely unknown world to me. (laughs) It was like I was suddenly fighting in an entirely different field.

Even as I was scrambling to keep up, I kept asking people around me for advice and studying in parallel with my work. It was tough, but as I got deeper into it, I found myself getting hooked. Eventually, with Super Monkey Ball Banana Rumble, we were able to implement an automated testing tool that gave us palpable results.

─That sounds like a lot of work. So, as part of the automated testing team, you need to handle everything from creating the tools, implementing them, and then verifying that they work properly.

Namiki:

Yes, I used to be on the side that uses the tools, but when I first came to the side that makes them, I was like “How do you even go about making this?” (laughs) But personally, I’m the type of person who enjoys tackling new problems and searching for solutions, so before I knew it, I got completely sucked into the world of test automation. Now, I’m an active part of the team.

Sakaue:

Namiki is the kind of person who likes to explore how game engines work and how to debug them, which makes him well-suited to the field of test automation. For example, he set up a system to analyze what causes memory shortages, which made debugging easier.

Namiki:

Back when I was working on Super Monkey Ball Banana Mania, I was in charge of localization-related programming, and I was already creating custom systems to run on Unity. As a result, I was fairly confident in my knowledge of programming at the engine level. However, even with that experience, implementing automated testing for Super Monkey Ball was still quite tough (laughs).

The system had originally been built around the Like a Dragon series, which meant it was designed for Dragon Engine-based, high-spec console development, like the PlayStation. But for Super Monkey Ball, we needed a system that worked with Unity and targeted the Nintendo Switch, a more casual gaming platform. On top of that, the Yakuza games are offline, story-driven games, while Super Monkey Ball is an online multiplayer battle royale – the genres couldn’t be more different (laughs)! That’s why we had to blend the expertise of both teams to make it work somehow.

─It sounds like it required a lot of expertise.

Sakaue:

In practice, having broad and diverse knowledge tends to be more important than just being highly specialized.

─How is your work perceived by the other departments and teams?

Sakaue:

It’s well received by the developers. I think that’s because it’s not something that only benefits engineers like us, who specialize in test automation – it can also be used by various other people. For example, if a programmer in charge of a minigame wants to test their work, they can set up an automated test on their own machine. That way, they can immediately tell if their minigame isn’t functioning properly. The fact that it’s genuinely helpful leads to greater understanding and support from the team.

Kuwabara also automated the testing for his minigame, ran it overnight, and used the bug reports he received in the morning to fix issues during the day. Another big factor is that all programmers go through automated testing-related training during their onboarding, so they know how to use it.

─How does it feel to come into work in the morning and find that an overnight test discovered bugs in your code?

Namiki:

It makes me happy, in a way. Although, it’s only after the bugs have been fixed and checked that I can be at peace. Until then, they’re just bug reports.

Kuwabara:

I don’t mind it either, but when I get a bunch of error reports all at once, it can be overwhelming (laughs).

Everyone:

(laughs)

─I guess that just means more work for you (laughs).

Kuwabara:

For Yakuza: Like a Dragon, I programmed and automated the testing for the Can Quest minigame. I remember coming in one morning and finding that the system had caught some weird bugs. Thanks to that, we were able to improve the response time for bug detection and fixes, so it was a huge help.

Sakaue:

We actually have documentation on that too. This minigame is tied to the main story, meaning you can’t progress in the game unless you clear the tutorial. That made automated testing absolutely essential.

─Right, I guess you need to be all the more thorough with checking unskippable content.

Sakaue:

When testing minigames, there’s a tendency to focus only on whether the game logic is working correctly or not. But actually, you need to test everything from walking up to the minigame’s location, playing it, and returning to the main scenario. Automated testing helps cover all those aspects.

Kuwabara:

With automated testing, we sometimes find rare bugs that likely wouldn’t appear in standard gameplay, which is another major advantage.

─So it tests situations that a human player would never intentionally try.

Kuwabara:

If we were only testing it manually, we’d have to run the game over and over again to catch these issues. But by automating the process, you can take advantage of both the volume and specificity of tests.

Thank you for your time!

In the second part of this interview, we talk to the RGG Studio team about how they go about preventing bugs from occurring in the first place.