Publication date of the original Japanese article: 2022-05-10 12:00 (JST)

Translated by Braden Noyes

Gamers often overlook the integral part that sound plays in bringing video games to life. Every sound heard in a game is controlled by a complex set of processes, and developers like Square Enix pay special attention to making sure those sounds deliver the best possible gameplay experience. One of the technologies that has helped Square Enix create its game audio over the years is the HCA codec, an audio technology that CRI Middleware has developed and maintained over the past 20 years.

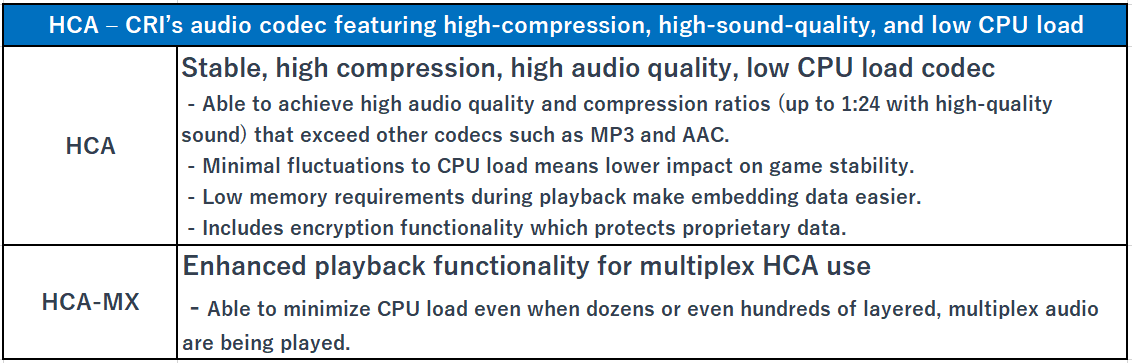

The HCA codec, or High Compression Audio codec, is CRI Middleware’s proprietary compressed audio codec. It is known for its high compression ratios, which let it reduce large amounts of sound data to much smaller files. Those files can then be played back with minimal load on a game system’s processor, all while maintaining high sound quality. The codec is widely used in games across many platforms, including consoles and smartphones.

But how exactly does the HCA codec aid Square Enix in their sound development? Well, we had a chance to speak with Tomohiro Yajima and Akihiro Minami from Square Enix’s sound department about the company’s history of sound development as well as how they put the HCA codec to use.

About CRI Middleware:

CRI Middleware offers sound and video middleware to game developers. Gamers may recognize the company by its blue CRIWARE logo, which appears in the more than 6,500 games that use its technology.

――Please introduce yourselves and tell us a little about what your jobs entail.

Yajima:

My name is Tomohiro Yajima. I currently work as general manager of the sound department, although I originally began my career as a sound designer. I first started working in the industry around the time of the original PlayStation console, and was in charge of the development and implementation of new sound systems.

Minami:

My name is Akihiro Minami. I attended a video game vocational school and, after graduating, was given a position as a programmer here in the sound department. The first project I worked on was Final Fantasy XIII, and I have been involved in the series’ development ever since. My main responsibilities center on sound driver programming and authoring tools, but I handle a number of other sound-related tasks as well.

――When it comes to sound-related work in video games, the main focus tends to be on creating the background music and sound effects, but today I’d like to go deeper and talk about the foundational work required for those aspects of the creation process. For starters, how many programmers like Minami work in the sound department?

Yajima:

It’s difficult to give concrete numbers, but I would say around half of the sound department works mainly on background music and sound effect creation. I’d estimate a little over 10% of the team works as sound programmers.

――What exactly does the job of a sound programmer entail?

Minami:

I think it’s relatively easy for players to grasp what goes into creating background music and other in-game sounds. What we do as sound programmers is a bit harder to explain. Ordinarily, when development of a new game begins, one of the first steps is incorporating our sound drivers into the project and explaining how they work. It’s important to explain things to the sound designers on a given project in a way that lets them do their job easily, even if they don’t fully understand the technical details of how the drivers work. I’d compare it to the way technical artists act as intermediaries during the development of a game’s graphics. A game’s development team often wants new features or functionality, and it’s our responsibility to update the software to meet those needs.

――With so many games being developed all the time, that sounds like quite a difficult job.

Minami:

That’s true. There are always new games in development, but the number of people doing this sort of work is limited. That is all the more reason we are committed to improving the quality of the support we offer while keeping operating costs low. Not only does the volume of sound assets games need grow year after year, but the specs of the hardware they run on keep improving as well. As new games try to take full advantage of those improvements, we have reached the point where simply throwing more manpower at the problem isn’t enough. It’s now much more important to focus on automation and optimization.

Yajima:

We have been developing our own sound drivers for quite some time. Methods of game development change over time, and it wasn’t always possible to simply insert sounds into a project using GUI tools. Each console’s hardware is unique and handles audio playback differently, so programming generally had to be done separately for each console. On top of that, even different games running on the same hardware often required different drivers.

――Square Enix has been using their own in-house sound system for a long time now. When did you first begin implementing the HCA codec, and how did that change come about?

Yajima:

As I mentioned before, we had been developing our own drivers for many years. During the PlayStation 2 and Xbox generation, games slowly began moving toward being multi-platform, and ever since the latter half of the PlayStation 2’s prime, the number of available game systems has only continued to grow. If we continued developing drivers as we had been, tailoring each one to a specific game, there was no way we could have maintained compatibility between so many platforms.

――I see. So reworking your driver designs was a direct result of the increase in gaming platforms. Multi-platform games are essentially the standard now, but that must have been quite a headache for developers back then.

Yajima:

It sure was, which is why we decided to switch to an all-in-one solution. We wanted sound drivers that would let us respond quickly whenever we were asked to help port a game from one console to another. The most important step was choosing a codec that could act as the base. At the time, a number of standard audio codecs were in use, including MP3 and ADPCM. PlayStation also used ATRAC, while Xbox used XMA. This meant that when a game was ported to a different system, you first had to convert all of its audio from one format to another.

However, games such as those in the Final Fantasy series were already using as much memory as a system could handle, so any increase in the size of the audio files could keep the game from running properly. So what all-in-one codec could we use regardless of the hardware? Our first answer was Ogg.

――I understand that, compared to formats like MP3 and ADPCM, Ogg offers a higher compression ratio while maintaining similar sound quality. It’s also patent-free. You never ended up adopting it, though, correct? What happened?

Yajima:

The fact that the format is open source also means that if we ever ran into an issue, there was no organization that could take responsibility for fixing it. We decided that if we wanted an all-purpose sound driver we could rely on for many years to come, it would be best to build on a codec with clearer accountability.

Minami:

Take, for example, how background music tracks are often played on a loop. If we wanted to do something a little more complicated, such as loop only a short section of a track, that was quite difficult to do with Ogg. The verification process was also much more involved, so we had to be extra cautious about adopting the format.

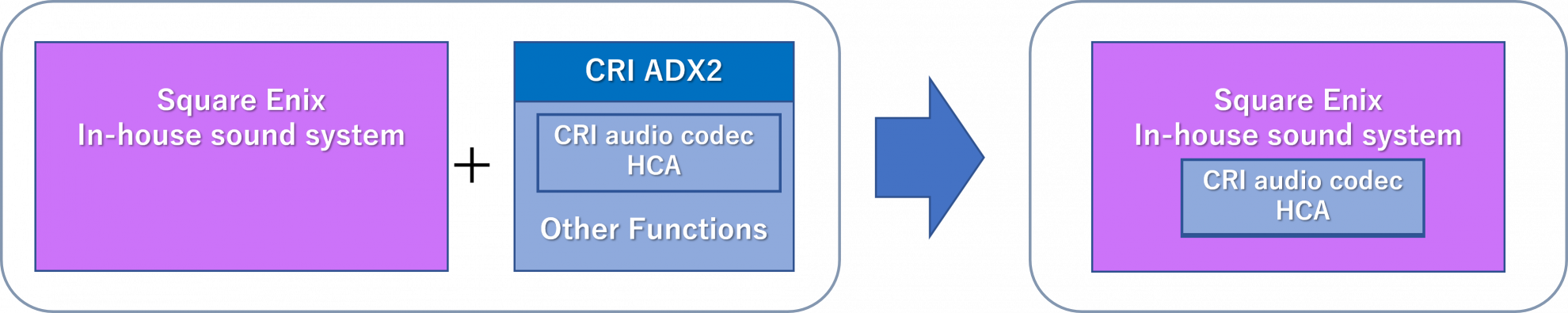

Yajima:

That’s when we discovered the HCA codec developed by CRI Middleware. We knew they had a format called CRI ADX*1 which supported multi-platform use, so we decided to inquire about their current codec. We already had an in-house tool at our disposal, so we ended up licensing just the codec and began implementing it in our projects. At the time, finding a codec that not only supported all platforms, but was also developed and maintained by an official entity that could take responsibility for troubleshooting was quite rare. We could not have been more pleased.

*1 CRI ADX is sound middleware provided by CRI Middleware. It gives game developers an easy way to implement audio in a variety of ways.

――Did you ever consider doing things in-house, perhaps having Minami develop a brand-new codec?

Minami:

We did look into the possibility, but after investigating the technologies involved and realizing how many patents were already held by others, we determined there were too many obstacles in the way to make it worth our time. We could either spend possibly years developing a new codec, or use one that we knew had been created by a reliable source. We went with the latter.

Yajima:

Codecs are inventions, and inventing something truly unique is always difficult. The moment you try to imitate someone else’s idea, you’re likely to run into patent problems. I remember asking Minami at one point, “Can’t you just make something original?” to which he responded, “Not a chance!”

――Did the implementation process go smoothly once you made the decision to change?

Minami:

It did. We were a bit nervous before receiving the API, but once we had it, it only took about two or three days to reach the point where we could encode audio with the HCA codec and play it back through our drivers. The API was simple and easy to use, so the entire process went quite smoothly.

――Are there any advantages or ideas that were made possible because of the HCA codec?

Yajima:

Let’s use Dragon Quest XI: Echoes of an Elusive Age as an example. The entire game uses the HCA codec, which means the compression ratio and sound quality are kept uniform. One advantage this offers is the ability to guarantee that the sound quality of any music we commission from third parties will be consistent, regardless of the hardware it’s played on. If we had used a different codec for each piece of hardware, the sound quality would have had to be checked separately for every codec. Using a single codec significantly reduces the time and work needed for quality control.

――Dragon Quest XI certainly was released on a large number of platforms. What sorts of things did the sound team pay close attention to?

Yajima:

After adding audio to the game, the team would check whether everything sounded and felt right. Making sure the sounds properly resonated with the mood of the game was a priority. For example, battle music is composed specifically to be used during encounters, so having it play during a cutscene would feel out of place. However, there are some cutscenes that lead directly into a fight. In a situation like that, it might feel more natural to have the battle music start during the cutscene rather than forcing a sudden transition. We often discussed which audio tracks should be used in which scenes with Koichi Sugiyama, composer of the music for the Dragon Quest series, as well as the rest of the sound team. That has been something we’ve done for all the games in the series.

――Could you give us any insights about the development of Dragon Quest XI from a game engineering perspective?

Minami:

Dragon Quest XI is available on many platforms, including Windows, and while game console makers each have their own dedicated codecs, Windows does not. This means that without proper security measures, there was a high risk that our data could be easily extracted. The HCA codec, however, allows data to be encrypted without adding much extra load on the processor, which let us properly protect our data. The team working on the Dragon Quest titles is devoted and takes great care with the series. From a game engineer’s perspective, the ideal situation is to receive a complete library of assets and be able to start building the game immediately. In that sense, the technical side isn’t overly complex.

Yajima:

Final Fantasy VII Remake pushed hardware specs to their limit. Up through the PlayStation 2 generation, hardware memory was very tight, so we had to carefully calculate the resources we needed so as not to overtax the streaming buffer*2. Hardware specs increased significantly starting with the PlayStation 3, and the PlayStation 4 in particular gave us a lot of extra room.

*2 The streaming buffer is a memory area that temporarily holds a certain amount of sound data ahead of playback so that the audio does not skip.
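To make the footnote concrete, here is a minimal sketch of a streaming buffer as a fixed-capacity ring buffer. It is not Square Enix’s or CRI Middleware’s implementation; the class and method names are hypothetical, and it assumes decoded samples are written by one loader and read by one playback routine, ignoring the threading and locking a real driver would need. When playback asks for more samples than are buffered, the gap is filled with silence, which is exactly the "skip" a correctly sized buffer is meant to prevent.

```python
from typing import List, Tuple


class StreamingBuffer:
    """Hypothetical fixed-capacity ring buffer of decoded audio samples."""

    def __init__(self, capacity: int) -> None:
        self._data = [0.0] * capacity
        self._capacity = capacity
        self._read = 0   # index of the next sample to play
        self._count = 0  # number of samples currently buffered

    def free_space(self) -> int:
        return self._capacity - self._count

    def write(self, samples: List[float]) -> int:
        """Copy as many samples as fit (loader side); return how many were accepted."""
        n = min(len(samples), self.free_space())
        write_pos = (self._read + self._count) % self._capacity
        for i in range(n):
            self._data[(write_pos + i) % self._capacity] = samples[i]
        self._count += n
        return n

    def read(self, n: int) -> Tuple[List[float], int]:
        """Return n samples for playback, padding with silence on underrun.

        The second value is how many samples were missing (0 means no skip).
        """
        available = min(n, self._count)
        out = [self._data[(self._read + i) % self._capacity] for i in range(available)]
        self._read = (self._read + available) % self._capacity
        self._count -= available
        missing = n - available
        out.extend([0.0] * missing)
        return out, missing
```

For example, with an 8-sample buffer that has been filled completely, a request for 10 samples returns the 8 buffered ones plus 2 samples of silence and reports `missing == 2`. Sizing the buffer is the trade-off Yajima describes: a larger buffer tolerates slower loading but costs memory.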

Minami:

Final Fantasy VII Remake used a significant amount of memory and CPU power for things like character AI, physics simulation, and video rendering, forcing us to pay closer attention to sound design. The game also has a lot of dialog, making it necessary to carefully calculate how much space the audio data would require and choose how much compression should be used.

――Final Fantasy VII Remake was certainly at the forefront, with its cutting-edge graphics and interactive music*3. With its use of so many advanced techniques, paired with the fact it was available for multiple platforms, it must have been extremely difficult to develop.

*3 Interactive music refers to music whose tempo, tone, and other aspects transition seamlessly in response to in-game conditions.
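The interview does not describe how Final Fantasy VII Remake schedules its musical transitions. As a hedged illustration of one common technique, a music system can defer a requested change until the next bar line so the switch lands on the beat. The sketch below assumes a constant tempo and time signature; the function name and parameters are hypothetical.

```python
import math


def next_bar_boundary(position: int, sample_rate: int, bpm: float, beats_per_bar: int) -> int:
    """Return the first sample index at or after `position` that starts a new bar.

    Assumes a constant tempo (bpm) and a fixed number of beats per bar.
    """
    samples_per_bar = sample_rate * 60.0 * beats_per_bar / bpm
    bars = math.ceil(position / samples_per_bar)
    return round(bars * samples_per_bar)
```

At 48,000 Hz, 120 BPM, and four beats per bar, one bar lasts 96,000 samples, so a transition requested at sample 100,000 would be scheduled for sample 192,000.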

Yajima:

Development limitations for some games can be quite strict. A team may come to us and ask, “Can you give us another option, even if it only reduces load by 1%?” In those cases, we might decide it would be faster to use the decoders built into the hardware running the game.

Minami:

In the case of the PlayStation 4 version of Final Fantasy VII Remake, it was necessary to use the faster hardware codecs built into the system. However, with the Windows version, we used the HCA codec, as the operating system does not have dedicated codecs of its own. Having the flexibility to choose in this manner is another big advantage.

――Having options is certainly a plus. Were there any scenes from Final Fantasy VII Remake that were particularly difficult to design?

Minami:

To be honest, there were quite a few, but one that stands out is the very first boss fight. I remember spending a lot of effort on finding a good balance between the interactive music and the sound effects in that scene.

Yajima:

This was true of past titles as well, but we always make sure everything from the end of the opening tutorial to the end of the first boss battle is polished to a shine. Those first scenes are the foundation the rest of the game’s development builds on, so the programmers pay special attention to them. With Final Fantasy VII Remake, I’m told the opening sequence, where soldiers keep appearing, was particularly difficult.

Minami:

Of course, everything eventually comes together at the end, but the techniques used as the foundation for the development process were essentially completed in earlier titles such as Final Fantasy XV. As a result, I feel as if some of the most difficult parts were actually coming up with ideas on how to improve those systems. I mentioned earlier in the interview the need for automation. Well, the sound effects for things like the rustling of clothes are all generated from the animations, and that function was expanded upon to make it possible for that auto-generation to work both during cutscenes and actual gameplay.

――Does that mean you can use auto-generated sounds for things like fabric rustling during cutscenes instead of having to add them manually during post-production?

Yajima:

Slow-motion scenes are of course an exception, but yes, these sounds can be generated during cutscenes as well. The previous version of this system only responded to arm and leg movements, but the current version is able to reference an entire character model. This gives the system the ability to play sounds that more accurately reflect very specific actions, such as a character falling on their back.
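Yajima only describes the system’s behavior, not its internals. As a purely hypothetical sketch of the idea, a foley trigger can compare joint positions between two animation frames and fire a cloth-rustle sound for any joint moving faster than a threshold. The pose format, joint names, and threshold are all assumptions. Watching only hand and foot joints mimics the earlier limbs-only behavior, while watching every joint also catches whole-body motion such as a fall onto the back.

```python
import math
from typing import Dict, Iterable, List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Pose = Dict[str, Vec3]  # joint name -> world-space position (hypothetical format)

# Hypothetical joint names standing in for a limbs-only setup.
LIMB_JOINTS = ("hand_l", "hand_r", "foot_l", "foot_r")


def joint_speeds(prev: Pose, curr: Pose, dt: float) -> Dict[str, float]:
    """Speed of each joint present in both poses, in units per second."""
    return {name: math.dist(prev[name], curr[name]) / dt
            for name in curr if name in prev}


def rustle_triggers(prev: Pose, curr: Pose, dt: float, threshold: float,
                    joints: Optional[Iterable[str]] = None) -> List[str]:
    """Return the joints moving fast enough to trigger a cloth-rustle sound.

    joints=None watches every joint in the model; passing LIMB_JOINTS
    reacts only to arm and leg movement.
    """
    speeds = joint_speeds(prev, curr, dt)
    watched = speeds.keys() if joints is None else [j for j in joints if j in speeds]
    return sorted(j for j in watched if speeds[j] > threshold)
```

In a fall where the hips and spine drop sharply but the hands barely move, the limbs-only call returns an empty list, while the whole-body call returns `["hips", "spine"]`.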

The team also discusses whether aspects of the interactive music feel right. Programmers and creators both have ideas about how things can and should be handled, but neither side has unilateral control over the other. Instead, they work together to find a middle ground that works for everyone and delivers something no one has experienced before. As a sound programmer, Minami has to take the sound creators’ opinions into consideration, and vice versa.

――Could you share any examples of other existing titles that use the HCA codec?

Yajima:

As far as multi-platform titles go, the HCA codec helped us release Actraiser Renaissance for Nintendo Switch, PlayStation 4, Steam, and smartphones. The music for this title was composed by Yuzo Koshiro, and using a different codec for each platform would have made it difficult to maintain the quality of his compositions. Of course, mixing the music separately to suit each platform’s capabilities is always an option, but that wasn’t the route we wanted to take with Actraiser Renaissance. We much prefer a method that lets us deliver a composer’s work uniformly to all players.

When a title is released on multiple platforms at the same time, it’s likely the consumer is going to purchase the version for whichever console or system they tend to use most often, even if they personally own multiple systems. Now let’s say, for example, this person goes over to a friend’s house and plays that same game on a different system. If they notice the sound is better or worse than the experience they had at home, how would that make them feel about the service they had been provided? Those are the sorts of questions we consider.

Another thing to keep in mind during the development process is the speed of HCA encoding. Say for example we decided to add a slight reverb to all the voice lines for a specific character in a game. Doing so would require us to re-encode all of those audio files. Now, is that something that can be taken care of over a short work break, or will it take an entire night to finish? The extra time required can add up quickly and make a big difference in production time, especially during the final stages of a game’s development. The ability to test more iterations in a short time is a huge benefit.

――Are there any other benefits to using the HCA codec that you haven’t mentioned yet? What about from a budgetary perspective?

Yajima:

Without a base codec to rely on, we would have probably ended up using either the Ogg file type or the codecs built into each individual platform. If we were to do that, however, each platform-specific problem that arose would have to be dealt with individually. With the number of projects we manage, how many people would we need to devote simply to maintenance roles? And if a large-scale problem ever arose, it’s plausible we would have to make the call to put the sound development on hold. Using the HCA codec provides a certain peace of mind. If anything happens, there is a dedicated support structure in place, which means fewer people are needed for maintenance tasks. This saves on costs and keeps the entire process more reliable.

Minami:

The codec is highly reliable, so we can be fairly confident it will work with the games we develop, which helps keep costs down. A different codec might take longer to read data, or require the data to be decoded in advance, limiting its usefulness in a game. The HCA codec is very useful in that it can calculate a sample’s position within the data directly, without those problems. It also supports gapless looping*4 as a standard feature, whereas before we always had to handle looping on a case-by-case basis. I was very happy when I first learned it could be used this way.

*4 Gapless looping is the ability to loop sounds seamlessly, with no discernible gap in the audio.
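Minami’s two points, locating a sample directly and looping without a gap, can be illustrated with a short sketch. It does not reproduce HCA’s actual file layout. It assumes, for illustration only, a codec whose frames all occupy the same number of bytes, each decode to the same number of samples, and can be decoded independently. Under those assumptions, the byte offset of any sample follows from simple arithmetic instead of a scan through the file, and a loop can jump from its end point back to its start point sample for sample. All names and numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterator, Tuple


@dataclass(frozen=True)
class FrameLayout:
    """Hypothetical layout of a codec with constant-size frames."""
    header_bytes: int       # bytes before the first frame
    frame_bytes: int        # every frame occupies this many bytes
    samples_per_frame: int  # every frame decodes to this many samples

    def locate(self, sample: int) -> Tuple[int, int]:
        """Return (byte offset of the frame holding `sample`, offset within that frame)."""
        frame_index, within_frame = divmod(sample, self.samples_per_frame)
        return self.header_bytes + frame_index * self.frame_bytes, within_frame


def loop_positions(start: int, loop_start: int, loop_end: int) -> Iterator[int]:
    """Yield playback positions forever, wrapping from loop_end back to loop_start.

    loop_end is exclusive: the sample played right after loop_end - 1 is
    loop_start, so no silence or duplicated sample is inserted at the seam.
    """
    if not (0 <= loop_start < loop_end and 0 <= start < loop_end):
        raise ValueError("need 0 <= loop_start < loop_end and 0 <= start < loop_end")
    pos = start
    while True:
        yield pos
        pos += 1
        if pos == loop_end:
            pos = loop_start
```

With the made-up values `FrameLayout(header_bytes=96, frame_bytes=256, samples_per_frame=1024)`, `locate(5000)` returns `(1120, 904)`: a player would read the frame at byte 1120, decode it, and skip its first 904 samples. The same lookup tells the player where to resume when `loop_positions` wraps back to `loop_start`, which is what makes looping only a short section of a track straightforward.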

――Thank you for providing us a glimpse into how you use the HCA codec and some examples of its practical applications in game development. We’re looking forward to seeing what else you have planned going forward.

■CRIWARE

CRIWARE consists of audio and video solutions for a multitude of platforms including smartphones, game consoles, and web browsers. More than 6,500 titles are powered by their technology. For more information, visit their official website.

© 2017,2020 ARMOR PROJECT/BIRD STUDIO/SQUARE ENIX All Rights Reserved.

© 1997, 2021 SQUARE ENIX CO., LTD. All Rights Reserved.

CHARACTER DESIGN: TETSUYA NOMURA/ROBERTO FERRARI

© 1990, 2021 QUINTET/SQUARE ENIX CO., LTD. All Rights Reserved.

© YUZO KOSHIRO