Project SEKAI COLORFUL STAGE! feat. Hatsune Miku is a rhythm and adventure game developed by Sega and Colorful Palette. Boasting songs by famous Vocaloid creators, the game was initially launched in September 2020 for mobile platforms. This rhythm game is not just about the music – it also focuses on delivering an engaging story through eye-catching visuals.

The expressive character models that appear in Project SEKAI are created using Live2D Cubism, a middleware for 2D animation production. Why did the game’s developers choose Live2D over the many other 2D animation technologies available? To find out, we interviewed Makoto Fujimoto of Colorful Palette, who has been an active member of the animation team since before the launch of Project SEKAI and currently serves as the title’s animation director.

Live2D Cubism has a free version that can be used indefinitely, as well as a PRO version starting from $14.57 a month.

―To start things off, please introduce yourself.

Makoto Fujimoto (Fujimoto hereafter):

I’m Fujimoto, the director of Project SEKAI’s animation team. I joined Colorful Palette in 2019 and have been directing story scenes and Live2D-animated scenes since before Project SEKAI’s launch.

―So, you’ve been involved in the production of the game’s Live2D animation for a long time. Live2D is used to depict characters in the story parts of Project SEKAI, but what does the process of creating the characters look like?

Fujimoto:

The first step in the process is to draw up assets based on the scenario. The scenario team first creates a scenario and decides which characters and units will appear in it. The assets are worked out once the scenario has taken shape. Next, the illustration team handles everything from design to coloring, while the animation team and outsourced staff take care of the partitioning and modeling. After the main text for the scenario has been completed, the animation and story scripting teams go back to working on the necessary assets, motions and facial expressions in-house, based on the dialogue and detailed descriptions. The three teams work together to create the necessary motions and facial expressions while carefully going over the scenario.

―I see, so the three teams work in parallel.

Fujimoto:

That’s right. Once the dialogue has been written, the scenario team may ask for a particular new facial expression, or for an existing motion to be modified to provide a more dramatic effect. In such cases, additional assets that need to be made sometimes come up at the model animation stage.

―I see, so there are times when additional assets are added on later. I get the impression that adding new assets at a late stage is generally undesirable in game development. Do you have any measures in place to reduce the number of additional orders for assets?

Fujimoto:

When it comes to additional asset orders, I place importance on assessing whether the asset really needs to be made from scratch or whether it can be derived somehow from existing assets. If they are adjustments or simple assets that can be made without asking the illustration team, the animators may create them themselves.

Expressing characters’ emotions in detail is a central aspect of Project SEKAI. It’s what we value as a company as well, so everyone is willing to constructively consider each other’s ideas if they are conveyed enthusiastically.

―So, it’s the kind of teamwork that’s possible because everyone loves the game.

Fujimoto:

At Colorful Palette, we hold scenario reviews and debugging meetings, and we have a culture of sharing opinions not only within each department but as a whole. By listening to the opinions of staff members who each have their own favorite characters, we can create motions and expressions that will please the players who love the characters just as much.

―The story parts of Project SEKAI are then completed by incorporating the finalized models into Unity.

Fujimoto:

Additional motions and facial expressions are created by the animation team and incorporated into Unity, along with the models, to turn them into assets. Once the assets are embedded, the story scripting team uses them to create the actual story scenes.

Why use Live2D?

―There are various 2D animation programs out there. Could you tell us why you chose to use Live2D?

Fujimoto:

Because it is a tool that allows us to achieve the level of expressiveness that we want without compromising the feel of the original illustrations. Once you have set up the initial settings, it is possible to very precisely create fine changes in facial expressions and motions. We previously tried out various tools, but found that Live2D was the most suitable for subtly controlling facial expressions using parameters.

―I see. You mentioned that you also use 2D animation tools from other companies. What do you use them for?

Fujimoto:

Project SEKAI has “Area Conversations” in which chibi characters appear, so we use the other 2D animation tools to animate the characters in these parts of the game. The Area Conversations are meant to depict casual chit-chat between the characters, which is why we use different tools for them.

―So, you use different tools depending on what you want to depict.

Fujimoto:

We use Live2D to adjust parameters such as eyes and lips in 0.1 increments, allowing for truly subtle emotional expressions. Live2D is a great tool for expressing emotions such as sadness (shedding big tears), rage, etc., which is why we use it in the story parts of the game. In Project SEKAI, we have numerous facial expression variations just for smiling, and it’s important for us to be able to quickly create new expressions by simply manipulating the parameters.

―I see, so, because the eyes, mouth, and eyebrows have separate parameters, it is easy to create new expressions by combining them.

Fujimoto:

That’s right. In Project SEKAI, new facial expressions are often added for each event, and existing assets are used to create a mesh that can be modified by a few pixels at a time. By combining parameters, we can create an infinite number of facial expressions. It is also easy to add extra assets when the need arises. In this sense, I feel it’s a very convenient tool.
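The idea of deriving a new expression from an existing one by adjusting a few parameters can be illustrated with a minimal Python sketch. It is not the Live2D Cubism API or Colorful Palette's pipeline: the parameter names, the values, and the `snap` and `make_expression` helpers are all hypothetical, and only the 0.1 step size comes from the interview.

```python
# Hypothetical illustration only: parameter names and values are invented,
# and this does not call any Live2D Cubism API.
STEP = 0.1  # increment size mentioned in the interview


def snap(value: float, step: float = STEP) -> float:
    """Round a parameter value to the nearest multiple of `step`."""
    return round(round(value / step) * step, 10)


def make_expression(base: dict[str, float], **overrides: float) -> dict[str, float]:
    """Copy an existing expression and override a few of its parameters."""
    expression = dict(base)  # leave the base expression untouched
    expression.update({name: snap(value) for name, value in overrides.items()})
    return expression


# An assumed "smile" preset with separate eye, eyebrow, and mouth parameters.
SMILE = {
    "eye_open": 0.8,
    "eye_smile": 1.0,
    "brow_y": 0.2,
    "mouth_form": 1.0,
    "mouth_open": 0.3,
}

# A variation of the smile made by changing three parameters.
shy_smile = make_expression(SMILE, eye_open=0.62, brow_y=-0.1, mouth_open=0.1)
```

Because each facial part has its own parameter, a variation such as `shy_smile` reuses everything from `SMILE` except the values that are overridden.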

―As a creator, what kind of tool would you say Live2D is?

Fujimoto:

I would say that it’s a tool that allows creators to realize anything they want depending on their creativity and ingenuity. Since there is no set way of expressing things, anything is possible depending on the way you play with it. Of course, system load can be a problem, but if you want to make a hundred different expressions, this tool will allow you to do so.

―Although the usage regulations are different from those of game production, I feel like VTuber modelers are also making big strides using Live2D.

Fujimoto:

In game production, you have to think about the game engine and other factors, so I think it requires a different approach, but one point in common with VTuber models is the high expandability. It is easy to create new costumes, new motions, and other expressions to your liking. It’s also nice that you can do the whole process within Live2D, if you use it in an ingenious way.

Updating Live2D allowed for new and improved models

―Tell us about model creation. What are your regulations for in-game models in Project SEKAI?

Fujimoto:

We try to keep the polygon count of the mesh around 10,000. We also have assets for deformers, and established standards based on that. However, the standards are only guidelines – our staff don’t have to strictly adhere to them.

―So, you kind of prioritize making what you envisioned?

Fujimoto:

Yes, that’s right. Project SEKAI was released in 2020, and our current regulations are based on the specifications that we obtained through load testing at that time. We set the recommended number of polygons and deformers to a certain level, so that even if we were to design more complex costumes and hairstyles, we would have enough leeway in terms of load.

At the time, we also set a limit of up to two models on the same screen, but we later changed this to up to three models. As device performance improves, we regularly update our regulations to improve the quality of the story scenes, while making sure to run load tests each time.

―I see, so advances in device performance have allowed you to express a wider range of things in game. Have updates to Live2D itself ever enabled you to do more in Project SEKAI?

Fujimoto:

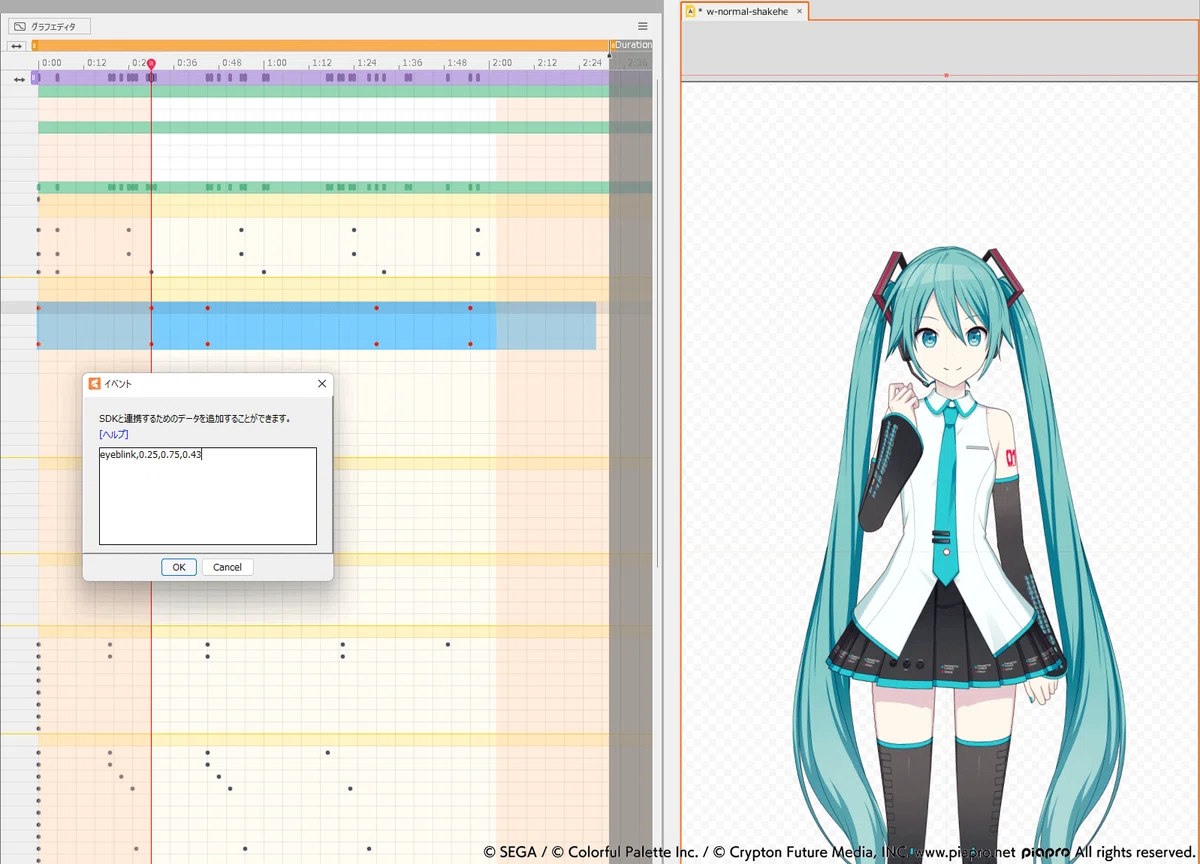

In game development, there’s also the SDK version (of Live2D Cubism) to consider, and it’s quite difficult to keep up with updates. However, we decided to update the SDK version to coincide with the third anniversary of Project SEKAI. After consulting with our engineers, we made this decision because the anniversary was a good time to revamp the UI and give players a new experience, which includes trying out new things in future story scenes.

―So, you took the plunge and decided to update. What has improved since then?

Fujimoto:

With the previous version, I felt the range of motion was a bit limited when it came to posing the characters. But after we updated to the Live2D Cubism 4 SDK, we were able to use the art path and mask inversion functions, which allowed us to improve both expressiveness and range of motion. One of our basic principles for Project SEKAI is that we actively consider introducing any function required to better express our vision, and our decision to update the SDK version was based on this way of thinking.

―Did you have any issues due to the update, though?

Fujimoto:

There were many problems! For example, when we drew the models in the new version, they would look fine in the editor, but the blink timing would be incorrect when we viewed them on the actual device. There were many other minor problems like that. It was quite difficult to identify these inconsistencies, so I was constantly working on the models and tinkering with them for about two months prior to the third anniversary.

―With so many models in Project SEKAI, it must be a tremendous task to check each one to see if it works with the new version. Did you revamp all the models when you updated the SDK version?

Fujimoto:

For the game’s third anniversary, we changed all the characters’ default costumes. So, we decided to make new default models (with the new costumes) for the new version of the game. If we had modified the old models, we would have risked destroying the animations we had made for them, so we decided to keep past stories as they were and produce new stories with the updated version.

―I see. It would have been even more difficult to do the upgrade if you had had to make compromises with the existing models.

Fujimoto:

We did our best to keep the game’s expressiveness intact and please our eagle-eyed fans who notice subtle changes. I saw users on X reacting to the updates, which is when I realized that people pay really close attention to our work.

The dedication to detail in Project SEKAI

―In creating Live2D animations, are there any techniques or things you pay attention to that are unique to Project SEKAI?

Fujimoto:

We set the overall value for blending pose transitions rather low so that they occur slowly, and we give hand animations distinct fingertip movements to create a lingering feeling at the end of each motion. For example, a soft motion like placing a hand on a cheek will end with a subtle movement of the fingertips. On the other hand, for intense movements like a character clenching their fist, the motion extends right to their tensed fingertips. By adding small finishing touches like these, we can make the scenes more emotionally expressive.
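As a rough illustration of why a low blend value makes a pose transition unfold slowly, here is a minimal Python sketch. It assumes the blend value is a per-frame fraction that moves each parameter toward its target; that interpretation, the parameter name, and the `blend_step` and `frames_to_settle` helpers are all hypothetical and are not taken from Live2D Cubism or from the team's actual settings.

```python
# Hypothetical illustration only: a generic per-frame blend,
# not the Live2D Cubism API or the team's actual configuration.


def blend_step(current: dict[str, float], target: dict[str, float],
               blend: float) -> dict[str, float]:
    """Move every parameter a fraction `blend` of the way toward the target pose."""
    return {name: value + (target[name] - value) * blend
            for name, value in current.items()}


def frames_to_settle(start: dict[str, float], target: dict[str, float],
                     blend: float, tolerance: float = 0.01,
                     max_frames: int = 10_000) -> int:
    """Count the frames until every parameter is within `tolerance` of its target."""
    pose = dict(start)
    for frame in range(1, max_frames + 1):
        pose = blend_step(pose, target, blend)
        if all(abs(target[name] - value) <= tolerance
               for name, value in pose.items()):
            return frame
    return max_frames


# An assumed single-parameter pose change, e.g. raising a hand toward the cheek.
rest = {"arm_raise": 0.0}
hand_on_cheek = {"arm_raise": 1.0}

slow = frames_to_settle(rest, hand_on_cheek, blend=0.05)  # low blend value
fast = frames_to_settle(rest, hand_on_cheek, blend=0.5)   # high blend value
```

Under these assumptions the low blend value takes many more frames to reach the target pose, and the final frames change only slightly, which is one way to get a lingering end to a motion.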

―They say that “God is in the details.” The way you talk about the movement of fingertips reminds me of that. Is there anything that you use as reference for motions and expressions?

Fujimoto:

While there’s a difference between real-time tracking and animation, VTuber models can provide a very clear source of reference in terms of Live2D. I also refer to works published by Live2D creators on X (formerly Twitter), as well as videos made by regular users. Also, Live2D JUKU (the official Live2D online course) has a lot of useful information.

As I mentioned earlier, Live2D is a tool that allows creators to realize anything they want depending on their creativity and ingenuity. I believe that input is the first step in learning, so I try to watch whatever videos are popular.

―I’m sure that many of you actively try to improve your skills on your own, but do you also study together as a team?

Fujimoto:

At Colorful Palette, we do not divide responsibilities between modelers and animators. Basically, those who are involved in Live2D get to experience the full workflow from modeling and animating to embedding. Also, when we have problems, we sometimes share knowledge with others in the CyberAgent group and ask them questions.

―Thank you very much. Finally, do you have a message for Project SEKAI’s players?

Fujimoto:

As the characters grow and each unit progresses, there will be numerous new scenes depicting conflict, joy, and various other emotions. Although there may not be much that stands out in terms of Live2D, if you pay close attention to the minute facial expressions, as well as subtle changes in movement and direction, I think you’ll be able to enjoy the game from a new perspective. We will continue to do our best to bring you wonderful stories and characters, and we appreciate your support.

―Thank you for your time!

Project SEKAI COLORFUL STAGE! feat. Hatsune Miku is available for iOS and Android. The game’s story sequences are created using Live2D Cubism, which has a free version that can be used indefinitely, as well as a PRO version starting at $14.57 a month.

© SEGA / © Colorful Palette Inc. / © Crypton Future Media, INC. www.piapro.net All rights reserved.