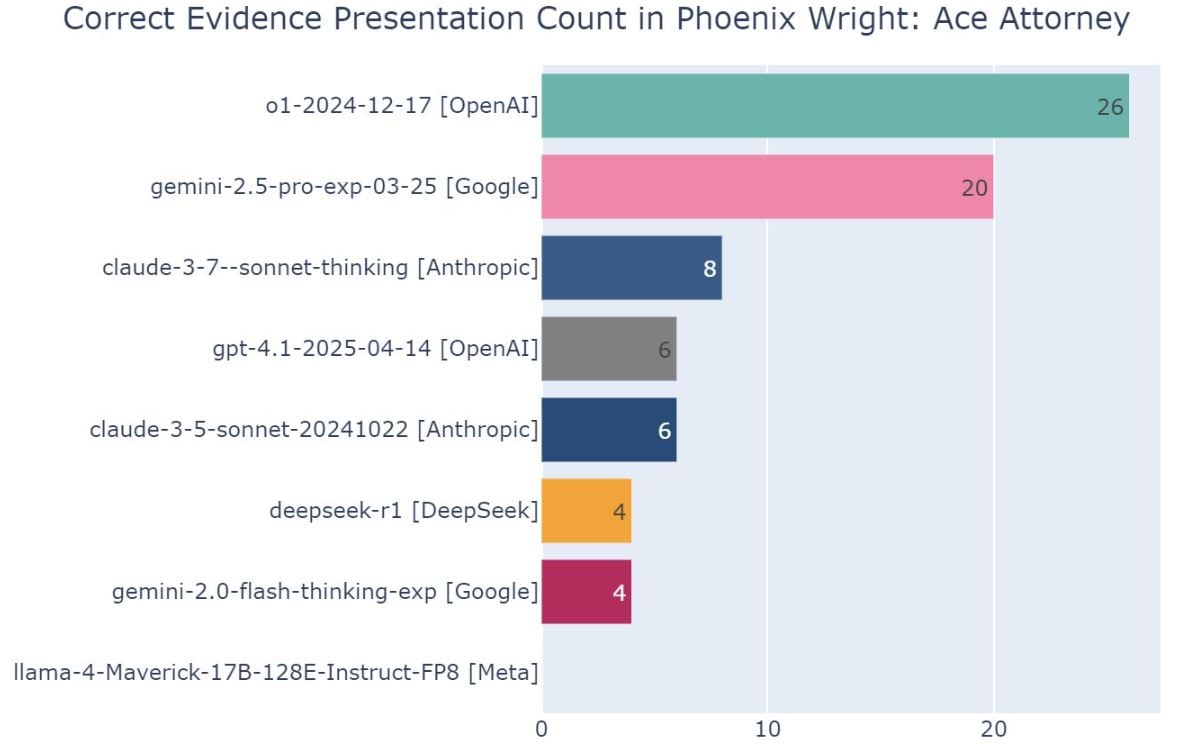

A machine learning research organization called Hao AI Lab recently published the results of an unusual test meant to evaluate the reasoning skills of popular AI models. What’s peculiar about it is the benchmark they chose: Capcom’s Ace Attorney. The researchers had AI models like OpenAI’s o1 and Google’s Gemini 2.5 Pro play Phoenix Wright: Ace Attorney, the first game in the franchise, to evaluate their ability to spot contradictions and present the correct evidence in trials.

According to Hao AI Lab, Ace Attorney makes such a good benchmark for evaluating AI’s capabilities because it tests not just memorization but several complex skills at once: long-context reasoning (spotting contradictions by cross-referencing prior dialogue and evidence), visual understanding (identifying the exact image that disproves false testimony) and strategic decision-making (deciding when to press a witness, present evidence, or hold back).

Hao AI Lab evaluated four AI models in this way: OpenAI’s o1, Google’s Gemini 2.5 Pro, Anthropic’s Claude 3.7 Sonnet (extended thinking mode), and Meta’s Llama-4 Maverick. To start with the conclusion, none of the AI models managed to win all five trials in the game. Llama-4 Maverick bombed the first episode, and Claude 3.7 Sonnet got a game over in the middle of the second. OpenAI’s and Google’s models, on the other hand, made it to episode four, but neither won that trial. You can watch a timelapse of their struggle in this side-by-side comparison.

Interestingly, an actual Ace Attorney developer reacted to the whole thing. Masakazu Sugimori, who composed the soundtracks for the first two mainline Ace Attorney games and voiced Manfred von Karma, took to X to express his surprise at the game’s unexpected application.

“How should I put this, I never thought the game I worked on so desperately 25 years ago would come to be used in this way, and overseas at that (laughs).

That said, I find it interesting how the AI models get stumped in the first episode. Takumi and Mikami were very particular about the difficulty level of Episode 1 – it’s supposed to be simple for a human. Maybe this kind of deductive power is the strength of humans?”

In a follow-up post, Sugimori expands on his comment about Ace Attorney director Shu Takumi and executive producer Shinji Mikami. “The reason why Takumi and Mikami were so particular about balancing the difficulty level of Ace Attorney’s first episode was because ‘there was no other game like it in the world at the time.’ It had to be a difficulty that would be acceptable to a wide playerbase, but it had to avoid being insultingly simple too. They were going for the kind of difficulty that gives you a sense of satisfaction when the solution hits you.” Much like its creators intended, Ace Attorney’s first episode is the definition of beginner-friendly, so AI clearly still has a long way to go.

While he seemed positive overall about video games playing a role in the evolution of technology, Sugimori also commented, “At the same time, I think we humans won’t lose to AI. While we may lose when it comes to performing individual tasks, the role of humans is to do the thinking, or rather, the directing.”