Bandai Namco Research has made a portion of its 3D motion datasets available for free on GitHub. They are intended for AI research and development (e.g., machine learning and classification with deep neural networks) and can’t be used for commercial purposes.

Bandai Namco Research is a member of the Bandai Namco Group that collaborates with other companies and research institutes in various fields, including the metaverse, xR (cross reality), AI-based speech synthesis, VR motion capture, and a virtual DJ/interactive virtual performance system (BanaDIVE AX).

The motion of most CG characters in modern video games is created with motion capture or animated by hand by specialists. But as the scale of content development continues to expand (including the metaverse) and the need to create an enormous quantity of characters and motion variations arises, conventional means of production are expected to reach their limits. That’s why Bandai Namco Research has been studying new ways to generate 3D motion graphics using AI-related technologies.

That said, such research requires datasets of various motion patterns, which are hard to come by. To help make progress in this field of study (and in the industry as a whole), they’ve decided to make a portion of their datasets open to the public. Here’s the description of the datasets available on GitHub:

There is a long-standing interest in making diverse stylized motions for games and movies that pursue realistic and expressive character animation; however, creating new movements that include all the various styles of expression using existing methods is difficult. Due to this, Motion Style Transfer (MST) has been drawing attention recently. MST aims to convert the motion in a clip with a given content into another motion in a different style, while keeping the same content. A motion is composed of content and style, where content is the base of the motion and style comprises attributes such as the mood and personality of the character tied to the motion.

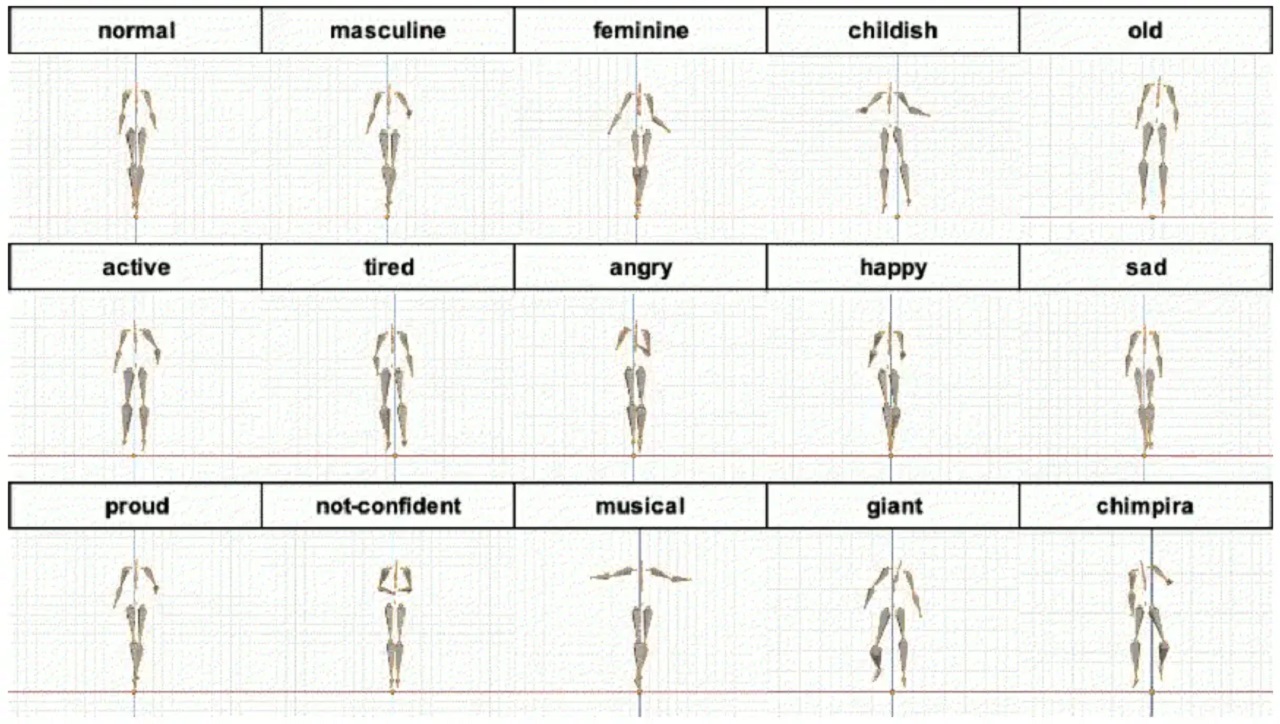

The datasets contain a diverse range of contents such as daily activities, fighting, and dancing, with styles such as active, tired, and happy. These can be used as training data for MST models. The animation below shows examples of visualized motions.

Bandai-Namco-Research-Motiondataset-1 (Details)

- 17 types of wide-range contents including daily activities, fighting, and dancing.

- 15 styles that include expression variety.

- A total of 36,673 frames.

Bandai-Namco-Research-Motiondataset-2 (Details)

- 10 types of content mainly focusing on locomotion and hand actions.

- 7 styles that use a single, uniform expression.

- A total of 384,931 frames.
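
To make the content/style split described above more concrete, here is a minimal Python sketch of how clips labeled with a content and a style might be grouped into source/target pairs for training an MST model. It is only an illustration: the `Clip` class, the `style_transfer_pairs` helper, and the clip names are hypothetical and are not taken from the datasets or their scripts.

```python
from collections import defaultdict
from dataclasses import dataclass
from itertools import permutations


@dataclass(frozen=True)
class Clip:
    """A motion clip with its two labels (hypothetical structure)."""
    name: str     # hypothetical clip identifier, not a real file name
    content: str  # the base of the motion, e.g. "dancing"
    style: str    # mood or personality attribute, e.g. "tired"


def style_transfer_pairs(clips):
    """Return (source, target) pairs that share content but differ in style."""
    by_content = defaultdict(list)
    for clip in clips:
        by_content[clip.content].append(clip)

    pairs = []
    for group in by_content.values():
        # Ordered pairs, so each clip serves as both source and target.
        for source, target in permutations(group, 2):
            if source.style != target.style:
                pairs.append((source, target))
    return pairs


# Illustrative labels only; the content and style names follow the article.
clips = [
    Clip("dancing_active", "dancing", "active"),
    Clip("dancing_tired", "dancing", "tired"),
    Clip("fighting_happy", "fighting", "happy"),
]

pairs = style_transfer_pairs(clips)
for source, target in pairs:
    print(f"{source.name} -> {target.name}")
```

In this sketch the "fighting" clip produces no pair because it appears in only one style, which is one reason a dataset covering many styles per content is useful for this kind of training.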

License

The datasets and scripts are available under the following licenses.

Bandai-Namco-Research-Motiondataset-1: CC BY-NC-ND 4.0

Bandai-Namco-Research-Motiondataset-2: CC BY-NC-ND 4.0

Motion visualization on Blender: MIT

The official website of Bandai Namco Research can be found here.